AI Code Review Tools That Speed Up Every Pull Request in 2026

AI-Based Code Review Tools | TL;DR

In 2026, AI code review tools have evolved from simple linters into "agentic" partners that understand full system architectures and business logic.

The top tools are now categorized by their primary strength: pull request (PR) automation, security-first analysis, or local privacy.

Top AI Code Review Tools (2026)

- Qodo (formerly CodiumAI): Best overall for enterprise-scale reviews. It features a "Codebase Intelligence Engine" that understands cross-repo dependencies and validates PRs against requirements tracked in Jira or Azure DevOps (ADO). It is noted for fast, detailed reports that rank findings by severity.

- CodeRabbit: Leading tool for PR workflow automation. It provides instant summaries, architectural diagrams, and one-click AI fixes directly in GitHub or GitLab. It is highly valued for reducing manual review time by up to 50%.

- Snyk Code AI (DeepCode): Best for security-centric reviews. It uses a "symbolic AI" engine to detect complex vulnerabilities like SQL injection or insecure data parsing by tracing data flows across the entire application.

- Aider: Best for terminal-based, local-first reviews. It acts as an open-source pair programmer that works directly with your local Git repository, making it ideal for teams with strict data privacy requirements.

- Amazon Q Developer: Best for AWS-native stacks. It identifies inefficiencies and security risks specifically tailored to AWS best practices, such as IAM role misconfigurations or Lambda optimization.

Key Capabilities in 2026

- Architectural Context: Modern tools maintain a persistent map of your system, allowing them to detect when a change in one microservice might break an API contract in another.

- Automated Testing: Tools like Early Catch now generate and run unit tests for every PR to verify that the AI-suggested fixes actually work before they are merged.

- Governance & Compliance: Enterprise versions now include "zero-retention" modes and local model support (via Ollama) to ensure code never leaves the organization's infrastructure.

Table of Contents

- What Are AI-Based Code Review Tools?

- Advantages of AI-Powered Code Review

- Immediate Code Review: How AI Speeds Things Up

- Improving Accuracy with AI-Powered Code Analysis

- AI-Assisted Assessment of Coding Practices in Modern Code Review

- AI-Based Code Review Platforms for Compliance and Standards Checks

- AI vs Manual Code Review: Why It’s Not Either-Or

- AI-Enhanced Code Reviews: A Human-in-the-Loop Model

- Effectiveness of AI in Code Review: What to Look For

- Limitations of AI Code Review: A Realistic View

- What Are the Best AI Code Review Tools? (Free & Paid)

- AI Code Review Best Practices: From Setup to Team Adoption

What Are AI-Based Code Review Tools?

An AI code review tool acts as an always-on, hyper-efficient digital teammate. It doesn't replace human reviewers, but it significantly augments their capabilities.

This frees up your team to focus on complex architectural decisions and core business logic, rather than chasing minor syntax issues or common anti-patterns.

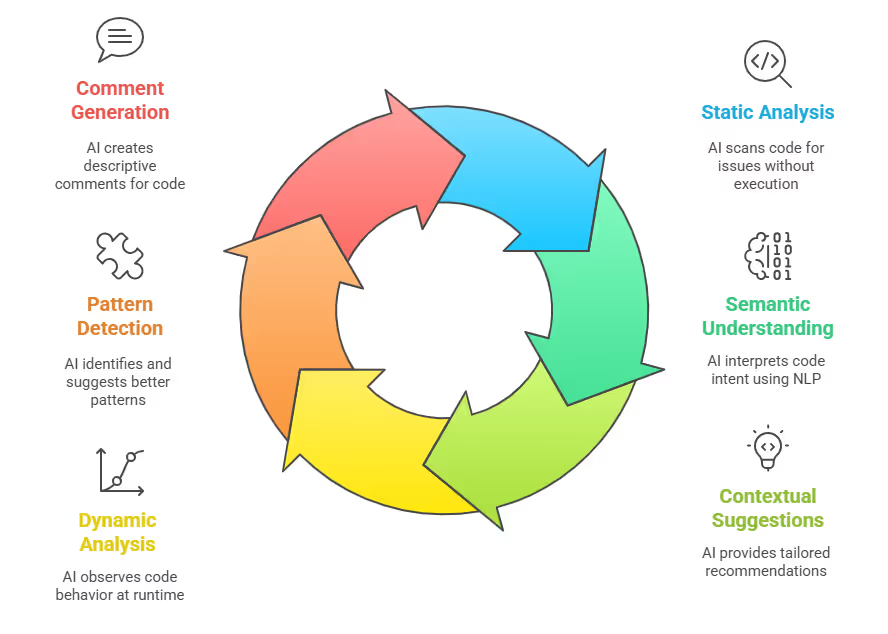

AI Assisted Code Review: Smarter Static Analysis

Traditional static analysis tools scan code without executing it, looking for predefined issues. AI takes this to a new level. By using Natural Language Processing (NLP) and Large Language Models (LLMs) trained on vast code datasets, AI tools understand the intent behind your code, not just its structure. This deep semantic understanding is a key development shaping software engineering.

This means AI can:

- Identify subtle code smells: Beyond simple complexity metrics, AI detects nuanced patterns that often predict future maintenance nightmares or performance bottlenecks.

- Contextualize suggestions: Instead of generic warnings, an AI tool might suggest a more efficient algorithm based on your actual data structures or typical application access patterns. For example, in a Python backend, an AI might recommend using a collections.deque instead of a list for a queue that frequently removes items from the front, since list.pop(0) shifts every remaining element (O(n)) while deque.popleft() runs in O(1).

- Learn from your codebase: You can fine-tune advanced tools to your team's specific coding standards, architectural patterns, and even internal libraries. This gives you highly relevant feedback aligned with your organization's unique requirements, enabling customized AI models.
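To make the deque suggestion concrete, here is a minimal sketch of the pattern such a review comment targets: a FIFO work queue drained from the front. The function names are illustrative, and the timings it prints will vary by machine.

```python
from collections import deque
import timeit


def drain_with_list(items):
    """Process items in FIFO order with a list: pop(0) shifts every remaining element, O(n) per pop."""
    queue = list(items)
    processed = []
    while queue:
        processed.append(queue.pop(0))
    return processed


def drain_with_deque(items):
    """Same FIFO processing with a deque: popleft() is O(1) per pop."""
    queue = deque(items)
    processed = []
    while queue:
        processed.append(queue.popleft())
    return processed


if __name__ == "__main__":
    data = range(20_000)
    for drain in (drain_with_list, drain_with_deque):
        seconds = timeit.timeit(lambda: drain(data), number=1)
        print(f"{drain.__name__}: {seconds:.4f}s")
```

Both functions return items in the same order; only the cost of removing from the front differs. If code only appends and pops at the end, a plain list is already efficient, so a deque suggestion there would be a false positive worth dismissing.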

Dynamic Analysis and Behavioral Insights

While static analysis is powerful, some issues only surface during runtime. Dynamic code analysis involves executing code in a controlled environment to observe its behavior. AI enhances this by:

- Predicting performance bottlenecks: AI models analyze runtime behavior and predict areas where code might become inefficient under load, preventing last-minute performance crises.

- Detecting memory leaks and resource mismanagement: By observing memory allocation and deallocation, AI pinpoints leaks notoriously difficult to catch manually.

- Identifying security vulnerabilities at runtime: Beyond static checks, dynamic analysis with AI simulates attacks or edge cases to uncover vulnerabilities that might otherwise go unnoticed.
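AI-driven tools automate the interpretation, but the raw signal behind leak detection is ordinary runtime measurement. The sketch below uses Python's standard tracemalloc module to compare allocations before and after repeated calls. The handlers, and the rule of thumb that large net growth suggests a leak, are illustrative assumptions, not how any particular product works.

```python
import tracemalloc

_response_cache = []  # hypothetical module-level cache that is never cleared


def leaky_handler(request_id):
    # Keeps a 10 KB payload alive forever: memory grows with every call.
    _response_cache.append(bytes(10_000))
    return request_id


def clean_handler(request_id):
    # The payload is released when the function returns.
    payload = bytes(10_000)
    return request_id, len(payload)


def net_allocation_growth(handler, calls=200):
    """Bytes still allocated after `calls` invocations; steady growth hints at a leak."""
    tracemalloc.start()
    try:
        before = tracemalloc.take_snapshot()
        for i in range(calls):
            handler(i)
        after = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    # Sum the per-file size differences between the two snapshots.
    return sum(stat.size_diff for stat in after.compare_to(before, "filename"))


if __name__ == "__main__":
    print("leaky:", net_allocation_growth(leaky_handler))
    print("clean:", net_allocation_growth(clean_handler))
```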

Intelligent Pattern Detection and Comment Suggestions

- AI's ability to learn from millions of code patterns and best practices is incredibly valuable. It doesn't just identify what's wrong; it suggests what's better.

- Consider a junior developer writing a complex conditional statement. An AI tool might automatically suggest refactoring it into a more readable strategy pattern or highlight redundant checks.

- Moreover, AI can generate descriptive comments for obscure code blocks or suggest improvements to existing documentation, promoting clearer knowledge transfer within your team.

- Tools like Refact.ai even offer autonomous AI agents that generate, optimize, and explain code, significantly reducing manual effort.

This mirrors the rising trend of Generative AI integration in software development.
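As an illustration of the conditional-to-strategy refactoring mentioned above, here is a hypothetical shipping-cost function before and after. The prices and method names are invented; the point is that a lookup table of pricing strategies replaces the branching, so adding a method means adding one entry.

```python
from typing import Callable, Dict


# Before: nested conditionals that grow with every new shipping method.
def shipping_cost_before(method: str, weight_kg: float) -> float:
    if method == "standard":
        if weight_kg <= 1:
            return 5.0
        else:
            return 5.0 + (weight_kg - 1) * 1.5
    elif method == "express":
        if weight_kg <= 1:
            return 12.0
        else:
            return 12.0 + (weight_kg - 1) * 3.0
    elif method == "pickup":
        return 0.0
    else:
        raise ValueError(f"unknown method: {method}")


# After: each method is a pricing strategy looked up in a table.
def tiered_price(base: float, per_extra_kg: float) -> Callable[[float], float]:
    """Flat base price for the first kg, then a per-kg rate for the rest."""
    def price(weight_kg: float) -> float:
        return base + max(weight_kg - 1, 0) * per_extra_kg
    return price


SHIPPING_STRATEGIES: Dict[str, Callable[[float], float]] = {
    "standard": tiered_price(5.0, 1.5),
    "express": tiered_price(12.0, 3.0),
    "pickup": lambda weight_kg: 0.0,
}


def shipping_cost_after(method: str, weight_kg: float) -> float:
    try:
        strategy = SHIPPING_STRATEGIES[method]
    except KeyError:
        raise ValueError(f"unknown method: {method}") from None
    return strategy(weight_kg)
```

Because both versions return identical results, the refactor can be checked against existing tests before a human reviewer decides whether the extra indirection is worth it.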

Advantages of AI-Powered Code Review

Integrating AI into your code review process significantly impacts every stage of your software development lifecycle.

From my experience leading engineering teams, an AI-augmented approach has been transformative, aligning with recent data showing that developers using AI are often more productive and report higher job satisfaction.

Improving Code Quality with AI-Driven Consistency

- Manual code reviews often struggle with consistency. Human reviewers, despite their best intentions, can have off days or apply standards inconsistently across different codebases or team members. AI greatly reduces this variability.

- AI tools apply coding standards and best practices the same way to every submission, leading to more uniform code quality.

- They act as an unyielding gatekeeper, ensuring every line adheres to your defined guidelines.

- This is crucial for large, distributed teams or when onboarding new developers, providing a consistent baseline for quality.

- This consistency is vital for maintaining a healthy, scalable codebase.

Saving Time and Accelerating Review Cycles: Immediate Code Review

Manual code reviews are a significant bottleneck in many development workflows. Developers spend hours reviewing pull requests, providing feedback, and waiting for others. This constant context-switching kills productivity.

AI dramatically shortens review turnaround time by automating routine checks, freeing developers to focus on higher-value tasks. Imagine opening a pull request, and within minutes, an AI provides a comprehensive analysis, highlighting potential issues, suggesting fixes, and summarizing changes. Tools like CodeRabbit deliver instant, line-by-line feedback and auto-generated summaries on pull requests. This means:

- Faster feature delivery: Code moves through the pipeline quicker, enabling more frequent releases and shorter sprint cycles.

- Reduced human bottlenecks: No more waiting for a specific reviewer. The AI is always on, providing immediate feedback.

- More focused human reviews: When human reviewers do step in, they concentrate on complex business logic, architectural implications, and strategic decisions that AI might not fully grasp. This immediate code review capability is a game-changer for CI/CD pipelines.

Reducing Bugs and Technical Debt Proactively

- Bugs and technical debt are the silent killers of software projects. They accrue interest over time until they become unmanageable. AI tools effectively identify these issues early in the development cycle, before they become entrenched and expensive to fix.

- AI code review tools identify bugs and technical debt early by analyzing code patterns and suggesting proactive refactoring.

- They detect complex structural issues, code smells, and deviations from best practices that contribute to technical debt. For example, a tool might flag overly complex code that, while functional, is likely to introduce bugs in future modifications.

- Tools like SonarQube excel at this, continuously analyzing code for issues, including security vulnerabilities and code smells, and providing real-time alerts.

- By integrating with CI/CD pipelines, every code change is evaluated for technical debt before deployment, ensuring your codebase remains healthy and maintainable. This proactive approach is essential for scaling software operations.
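Complexity flags like the one described above are often based on cyclomatic complexity, a count of independent paths through a function. The sketch below approximates it with Python's ast module. The node list is a simplification of McCabe's metric, the threshold of 10 is a commonly used starting point rather than a fixed standard, and nested functions are counted in their parent as well.

```python
import ast

# Each of these nodes adds one decision point (a simplified McCabe-style count).
_DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                   ast.ExceptHandler, ast.comprehension)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity: 1 + decision points + extra boolean operands."""
    score = 1
    for node in ast.walk(func):
        if isinstance(node, _DECISION_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two short-circuit branches.
            score += len(node.values) - 1
    return score


def flag_complex_functions(source: str, threshold: int = 10):
    """Return (name, line, score) for every function whose score exceeds threshold."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = cyclomatic_complexity(node)
            if score > threshold:
                findings.append((node.name, node.lineno, score))
    return findings


SAMPLE = (
    "def simple(x):\n"
    "    return x + 1\n"
    "\n"
    "\n"
    "def branchy(x, y):\n"
    "    if x > 0 and y > 0:\n"
    "        return 1\n"
    "    elif x < 0:\n"
    "        return 2\n"
    "    for i in range(x):\n"
    "        if i % 2:\n"
    "            continue\n"
    "    return 3\n"
)
```

With a deliberately low threshold, flag_complex_functions(SAMPLE, threshold=5) reports only branchy; in CI the same check would run on every changed file.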

Enhancing Security Flaw Detection

- Security is paramount, and vulnerabilities often stem from subtle coding errors. Manual security reviews can be time-consuming and often miss less obvious flaws.

- AI code review tools excel at identifying security vulnerabilities, performing in-depth scans and providing immediate alerts. They leverage extensive databases of known vulnerabilities and apply sophisticated analysis techniques, including Static Application Security Testing (SAST) and taint analysis, to detect potential risks.

- From SQL injection vulnerabilities to cross-site scripting (XSS) issues, AI acts as an early warning system. Snyk, for example, uses AI built on DeepCode technology to scan first-party code (Snyk Code) and separately flags known vulnerabilities in open-source libraries and packages (Snyk Open Source).

- This is critical for any company, especially those handling sensitive customer data.
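Commercial SAST engines trace tainted data across functions and files. The much simpler sketch below only inspects the call site: it flags .execute() calls whose SQL string is assembled at runtime, which catches the classic injection pattern but will also flag harmless concatenations. It illustrates the idea and is not how Snyk or any other tool implements it.

```python
import ast


def _builds_string_at_runtime(node: ast.AST) -> bool:
    """True for f-strings with placeholders, `+`/`%` expressions, and str.format() calls."""
    if isinstance(node, ast.JoinedStr):
        return any(isinstance(part, ast.FormattedValue) for part in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True
    return (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format")


def find_sql_injection_risks(source: str):
    """Line numbers of .execute()/.executemany() calls whose SQL is built dynamically."""
    risky_lines = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
                and node.func.attr in {"execute", "executemany"}
                and node.args and _builds_string_at_runtime(node.args[0])):
            risky_lines.append(node.lineno)
    return sorted(risky_lines)


SAMPLE = '''
cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
cur.execute("SELECT * FROM users WHERE name = ?", (name,))
cur.execute("SELECT * FROM users WHERE id = " + user_id)
'''
```

The fix such a tool would suggest is the parameterized form on the middle query, where the database driver handles escaping. Placeholder syntax varies by driver, for example ? in sqlite3 and %s in psycopg2.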

AI-based Code Review Platforms for Compliance and Standards Checks

- Consistent coding style and adherence to best practices are crucial for maintainability and collaboration.

- However, manually enforcing these standards can be tedious and lead to friction within teams.

- AI code review tools automatically enforce coding standards by highlighting inconsistencies and deviations, ensuring uniformity and maintainability.

- They act as an objective enforcer, ensuring every piece of code conforms to the team's agreed-upon guidelines. This includes everything from variable naming conventions and code formatting to more complex architectural patterns.

For industries with strict regulatory requirements, AI-based code review platforms for compliance and standards checks can be configured to verify adherence to specific industry standards like OWASP Top 10, CWE Top 25, or even internal company policies, significantly simplifying the audit process.
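Compliance checks usually end in a gate: findings mapped to a weakness catalog either block the merge or they don't. Here is a minimal sketch assuming a hypothetical JSON export with a cwe field on each finding. Real tools have their own formats (SARIF is a common one), so the parsing would need to change.

```python
import json

# Merge-blocking weakness classes. CWE-79 (XSS), CWE-89 (SQL injection) and
# CWE-78 (OS command injection) all appear in MITRE's CWE Top 25; extend as needed.
BLOCKING_CWES = {"CWE-79", "CWE-89", "CWE-78"}


def compliance_gate(findings_json: str, blocking=BLOCKING_CWES):
    """Return (passed, blockers) for a review tool's findings.

    Assumes a hypothetical export: a JSON array of objects with "rule", "cwe"
    and "file" keys.
    """
    findings = json.loads(findings_json)
    blockers = [f for f in findings if f.get("cwe") in blocking]
    return not blockers, blockers


SAMPLE_FINDINGS = json.dumps([
    {"rule": "sql-string-concat", "cwe": "CWE-89", "file": "app/db.py"},
    {"rule": "naming-convention", "cwe": None, "file": "app/views.py"},
])
```

In a CI job you would exit with a non-zero status when the first value is False, and archive the findings file as evidence for the audit trail.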

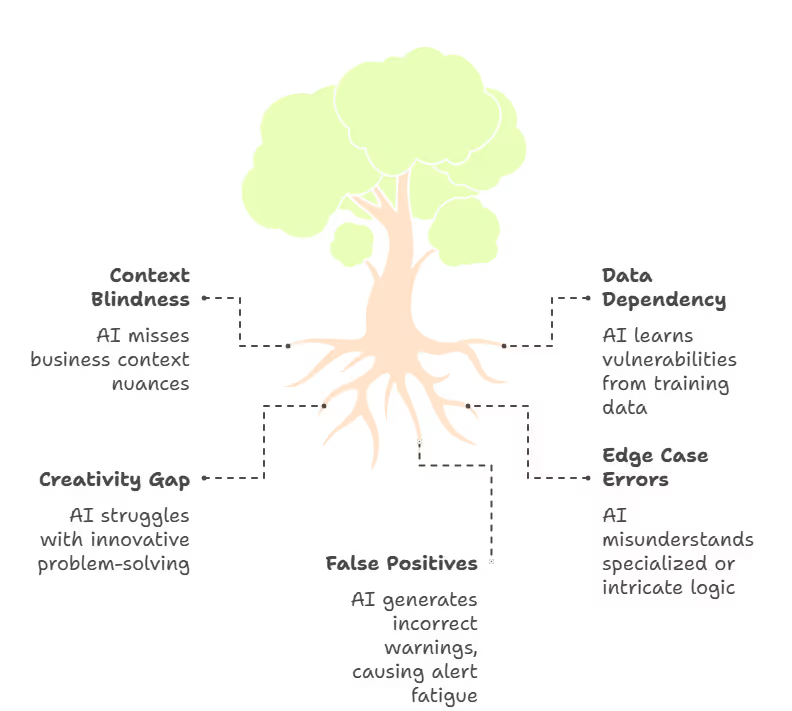

Limitations of AI Code Review: A Realistic Perspective

While AI offers compelling advantages, it's crucial to approach AI code review with a realistic understanding of its current limitations. As an AI engineer, I can attest that these tools are powerful, but they aren't a silver bullet.

Recent studies suggest that while AI boosts individual productivity, it can sometimes negatively impact delivery stability if not properly managed, potentially leading to larger batch sizes and requiring more nuanced human intervention.

Context Over Code: The Human Element Remains Key

- AI tools, despite advances in understanding code syntax and patterns, often struggle with the broader business context, architectural nuances, and implicit assumptions within a project.

- They might suggest an "efficient" algorithm that inadvertently conflicts with a specific business rule or a subtle design decision human engineers understand.

- For instance, an AI might not grasp why a seemingly inefficient loop is intentionally used for a specific caching mechanism or why certain data structures are chosen for future scalability rather than immediate performance.

- This "context over code" limitation means human oversight remains vital.

The Training Data Dilemma: Garbage In, Garbage Out

- The quality and breadth of an AI model's training data directly impact its accuracy and reliability.

- If an AI is trained on a vast corpus of publicly available code, it might inadvertently learn and suggest outdated practices, security vulnerabilities, or even licensed code snippets without proper attribution.

- While leading tools actively curate their training data, the risk of inheriting suboptimal patterns or introducing unintended issues remains.

- You must critically evaluate AI-generated suggestions, especially for security-sensitive or performance-critical sections of code.

Creativity Isn't Easily Automated: Beyond Boilerplate

- Coding is as much an art as it is a science, involving creative problem-solving, innovative design, and strategic thinking that AI currently cannot replicate.

- AI excels at generating boilerplate code, identifying common patterns, and suggesting refactorings based on established best practices.

- However, designing complex new algorithms, architecting scalable systems from scratch, or devising novel solutions to unprecedented problems requires human creativity and ingenuity.

- AI is a powerful assistant, but it is not yet the architect.

Addressing Edge Cases and Intricate Logic

- AI code review tools may falter when confronted with highly specialized edge cases or extremely intricate business logic that deviates from common patterns.

- Their statistical models are optimized for frequently occurring scenarios.

- Unique, custom-built logic or highly domain-specific implementations can often be misunderstood by AI, leading to either missed issues (false negatives) or incorrect suggestions (false positives).

- This is particularly true for legacy systems with highly idiosyncratic codebases.

Potential for False Positives and Developer Fatigue

- Despite continuous improvements, AI code analysis tools can still produce false positives, flagging issues that aren't genuine problems.

- While manageable, a high rate of false positives leads to "alert fatigue" among developers, causing them to dismiss valid warnings or lose trust in the tool.

- Effective implementation requires careful tuning of the tool's sensitivity and a feedback loop to refine its understanding of your specific codebase and standards.
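Tuning sensitivity usually comes down to two knobs: a confidence threshold and a list of suppressions the team has reviewed. The sketch below shows both, assuming a hypothetical tool that reports a confidence score per finding; the rule names and paths are made up.

```python
import fnmatch
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str
    path: str
    confidence: float  # 0.0 to 1.0, as reported by the (hypothetical) tool


def triage(findings, min_confidence=0.7, suppressed=()):
    """Split findings into (shown, hidden).

    `suppressed` holds (rule, path_glob) pairs the team reviewed and accepted,
    e.g. ("magic-number", "tests/*"). Low-confidence findings are hidden too.
    """
    shown, hidden = [], []
    for finding in findings:
        is_suppressed = any(
            finding.rule == rule and fnmatch.fnmatch(finding.path, glob)
            for rule, glob in suppressed
        )
        if is_suppressed or finding.confidence < min_confidence:
            hidden.append(finding)
        else:
            shown.append(finding)
    return shown, hidden


FINDINGS = [
    Finding("unused-import", "app/main.py", 0.9),
    Finding("magic-number", "tests/test_pricing.py", 0.95),
    Finding("possible-null-deref", "app/db.py", 0.4),
]
```

Keeping hidden findings instead of discarding them lets the team periodically check whether the threshold is masking real problems.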

AI vs. Manual Code Review: A Collaborative Synergy

The discussion often shifts to "AI vs. Manual Code Review," implying an adversarial relationship. From my perspective, this is a false dichotomy.

The most effective approach is a powerful synergy: AI-enhanced code reviews.

The Strengths of Each Approach

Manual Code Review:

- Deep Contextual Understanding: Human reviewers grasp the "why" behind the code, understanding business requirements, architectural intent, and project-specific nuances.

- Strategic & Architectural Insights: They can identify higher-level design flaws, suggest alternative architectural approaches, and assess the broader impact of changes.

- Complex Problem-Solving: Humans are adept at spotting subtle logic errors, anticipating future issues, and identifying vulnerabilities that depend on complex, multi-component interactions.

- Knowledge Transfer & Mentorship: Code reviews are invaluable opportunities for junior developers to learn from senior peers, fostering a culture of continuous improvement.

AI Code Review (AI-Assisted Code Review):

- Speed and Scalability: AI reviews vast amounts of code in seconds, ideal for large codebases and frequent commits. This allows for immediate code review feedback.

- Consistency and Objectivity: AI applies rules uniformly, removing the fatigue and reviewer-to-reviewer inconsistency that affect manual reviews.

- Detection of Common Issues: It excels at finding syntax errors, style violations, security vulnerabilities, and common anti-patterns that often consume significant human review time.

- Compliance and Standards Checks: AI is highly effective for automated verification against predefined coding standards and regulatory compliance requirements.

- Proactive Debt Reduction: AI continuously flags technical debt and suggests refactorings, helping maintain code health over time.

The Power of AI-Enhanced Code Reviews

The optimal strategy is a "human-in-the-loop" model, where AI and human reviewers complement each other.

- AI as the First Line of Defense: AI performs the initial, immediate scan, catching the low-hanging fruit (syntax, style, common bugs, obvious security flaws). This pre-review streamlines the process.

- Human Focus on High-Value Tasks: With routine checks handled by AI, human reviewers concentrate on critical aspects: business logic, architectural design, complex security scenarios, and the overall strategic direction of the code.

- AI-Assisted Assessment of Coding Practices: AI tools can be configured to assess adherence to specific coding practices within your team, like comment clarity, data structure efficiency, or consistent application of design patterns. This AI-assisted assessment of coding practices provides objective data for discussions during human reviews and helps maintain overall coding practice quality.

- Continuous Improvement Loop: Combined feedback from AI and human reviews allows for continuous learning. You can fine-tune AI models based on human acceptance or rejection of suggestions, improving their accuracy over time.

This collaborative approach ensures that code is not only technically sound and compliant but also conceptually robust and strategically aligned with business goals.

Best AI Code Review Tools Compared

Choosing the right AI code review tool depends on various factors: your team size, budget, programming languages used, and existing CI/CD infrastructure. Here's a comparison of some prominent players, encompassing both free and paid options, and their strengths. The AI Code Tools Market is experiencing robust growth, with code review tools holding a significant share (around 30% of the market in 2024), reflecting their increasing importance.

Effectiveness of AI in Code Review: Key Considerations

- Language Support: Does it support all the programming languages your team uses (Python, Java, JavaScript, Go, C++, etc.)? Many tools like Refact.ai offer broad language recognition.

- Integration: Does it seamlessly integrate with your version control system (GitHub, GitLab, Bitbucket) and CI/CD pipelines (Jenkins, GitHub Actions, CircleCI)? Tools like CodeRabbit are known for their easy GitHub integration.

- Contextual Understanding: How well does the AI understand your entire codebase context versus just the diff of a pull request? Tools like Greptile claim to provide reviews with full codebase context, leveraging advances in LLMs to understand code intent.

- Customization: Can you customize the rules, integrate private models, or enforce company-specific guidelines? This is a growing trend as companies seek to fine-tune AI for their unique codebases.

- Security Focus: Does it have strong capabilities for identifying security vulnerabilities (SAST, DAST)?

- Pricing Model: Is it per user, per line of code, or project-based? Costs can range significantly.

- False Positive Rate: How accurate are its suggestions, and how much noise (false positives) does it generate? This directly relates to the effectiveness of AI in code review and user adoption.

The effectiveness of AI in code review is best measured not by 100% accuracy, but by its ability to significantly reduce human effort for routine checks and proactively catch issues that might otherwise slip through.
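One way to turn the checklist above into a decision is a weighted score per candidate. The weights, the 1 to 5 scale, and the tool names below are placeholders for your own trial results, not recommendations.

```python
# Hypothetical weights for the criteria above; they sum to 1.0. Adjust to your priorities.
WEIGHTS = {
    "language_support": 0.20,
    "integration": 0.20,
    "context": 0.15,
    "customization": 0.10,
    "security": 0.15,
    "pricing": 0.10,
    "low_false_positives": 0.10,
}


def score_tool(ratings: dict) -> float:
    """Weighted score from 1-5 ratings your team assigns during a trial (5 = best)."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return round(sum(weight * ratings[name] for name, weight in WEIGHTS.items()), 2)


def rank_tools(candidates: dict) -> list:
    """Candidate names ordered from best to worst weighted score."""
    return sorted(candidates, key=lambda name: score_tool(candidates[name]), reverse=True)


TRIAL = {
    "Tool A": {name: 4 for name in WEIGHTS},
    "Tool B": {**{name: 3 for name in WEIGHTS}, "security": 5},
}
```

Phrasing the false-positive criterion as "low_false_positives" keeps every scale pointing the same way, so a higher rating is always better.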

From Theory to Practice: A Step-by-Step Implementation Guide

You understand the "what" and "why." Now, let's get practical. Here's how your team can actually implement AI code review tools, a timely question given that IT leaders project over 20% of their budgets will go to AI in 2025.

Define Your Core Problem (and Start Small):

- Action: Before picking a tool, identify your biggest pain point. Is it slow review times? Too many security bugs? Inconsistent coding styles?

- Example: If your startup struggles with security vulnerabilities in its customer-facing web app, prioritize tools strong in SAST, like Snyk. If reviews consistently delay releases, focus on tools that give immediate, lightweight feedback on PRs, like CodeRabbit.

- Benefit: Focusing on one problem first gives you quick wins and shows your team the immediate value.

Choose the Right AI-Based Code Review Tool for Your Team:

- Action: Review the comparison table above. Consider your primary programming languages, existing version control (GitHub, GitLab), and budget.

- Consideration: For smaller teams, explore free tiers or affordable options. For enterprise-grade needs or deep security, consider comprehensive platforms.

- Example: A Go development team should look for tools with robust Go language support and seamless GitHub integration.

- Benefit: Selecting the right tool ensures compatibility and maximizes your investment.

Integrate with Your Existing Workflow (Seamlessly!):

- Action: Connect the chosen AI tool directly to your version control system (e.g., GitHub, GitLab) and your CI/CD pipeline (e.g., Jenkins, GitHub Actions).

- How-to: Most tools provide straightforward setup instructions, typically involving installing an app or configuring a webhook. Configure the tool to run automatically on every pull request.

- Example: For a team using GitHub, install the CodeRabbit GitHub app. It will automatically comment on your pull requests, providing immediate feedback.

- Benefit: Automated triggers provide immediate code review, catching issues as early as possible, often before human eyes even see the code.
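Hosted apps such as CodeRabbit collect the changed files themselves. If you instead wire a self-hosted reviewer or linter into CI, a first step is usually to limit it to files changed in the pull request. This sketch assumes the base branch is origin/main and that the job runs in a git checkout with that ref fetched; the suffix list is a placeholder.

```python
import subprocess

# Extensions your reviewer or linter understands; adjust for your stack.
REVIEWABLE_SUFFIXES = (".py", ".js", ".ts", ".go")


def reviewable_files(diff_name_only: str, suffixes=REVIEWABLE_SUFFIXES):
    """Filter `git diff --name-only` output down to source files worth reviewing."""
    paths = (line.strip() for line in diff_name_only.splitlines())
    return [path for path in paths if path.endswith(suffixes)]


def changed_files(base_ref: str = "origin/main"):
    """Files changed on this branch since it diverged from base_ref.

    Run inside a git checkout; --diff-filter=d leaves out deleted files.
    """
    result = subprocess.run(
        ["git", "diff", "--name-only", "--diff-filter=d", f"{base_ref}...HEAD"],
        capture_output=True, text=True, check=True,
    )
    return reviewable_files(result.stdout)
```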

Customize and Fine-Tune for Your Codebase:

- Action: Don't use default settings blindly. Configure the tool's rules, sensitivity, and ignored files/directories.

- How-to: Most tools allow you to define rules in a configuration file (e.g., .eslintrc or eslint.config.js for JavaScript, .pylintrc for Python, or tool-specific YAML files). Add your team's specific coding standards, naming conventions, and architectural patterns. This is a crucial step towards building customized AI models for your internal needs.

- Example: If your team has a strict rule about not using global variables, add a custom rule to flag them immediately.

- Benefit: Customization reduces false positives and ensures the AI focuses on issues truly relevant to your team, improving the accuracy of AI-powered code analysis.
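For Python, pylint already ships a global-statement check (W0603) that you can enable in .pylintrc. The sketch below shows what a custom, team-specific rule of that kind looks like using the standard ast module; the checker class and function names are hypothetical.

```python
import ast


class GlobalStatementChecker(ast.NodeVisitor):
    """Records every `global` statement together with the function it appears in."""

    def __init__(self):
        self.findings = []
        self._scope = ["<module>"]

    def visit_FunctionDef(self, node):
        # Track the enclosing function so nested functions are reported once, correctly.
        self._scope.append(node.name)
        self.generic_visit(node)
        self._scope.pop()

    visit_AsyncFunctionDef = visit_FunctionDef

    def visit_Global(self, node):
        self.findings.append((self._scope[-1], node.lineno, tuple(node.names)))


def find_global_statements(source: str):
    """Return (function_name, line, names) for each `global` statement in source."""
    checker = GlobalStatementChecker()
    checker.visit(ast.parse(source))
    return checker.findings


SAMPLE = "counter = 0\n\n\ndef bump():\n    global counter\n    counter += 1\n"
```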

Educate Your Developers (Build Trust, Not Resentment):

- Action: Conduct workshops or create documentation explaining how the AI tool works, its capabilities, and its limitations. Emphasize that it's an assistant, not a replacement.

- How-to: Encourage developers to review AI suggestions critically. Create a process for them to mark false positives or suggest improvements to the tool's configuration.

- Example: Hold a "Code Review AI Lunch & Learn" session where an engineer demonstrates how the AI caught a common bug and how to interpret its suggestions.

- Benefit: A well-informed team embraces the tool, leading to higher adoption and better results.

Establish a Feedback Loop and Monitor Progress:

- Action: Regularly review the AI's performance. Track metrics like review cycle time, the number of issues caught by AI versus humans, and the rate of accepted AI suggestions.

- How-to: Use your team's existing communication channels (Slack, Jira) to discuss AI feedback. Schedule a monthly review to analyze false positives and areas where the AI can improve.

- Example: If the AI consistently flags an "issue" that your senior engineers deem acceptable, update the tool's configuration to ignore that specific pattern.

- Benefit: Continuous feedback improves the AI's accuracy over time, making it an even more effective tool for AI-assisted assessment of coding practices.
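The metrics listed above are simple to compute once you export per-PR data from your version control system. The record layout below is a hypothetical example, and the sample numbers are illustrative, not measurements.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PullRequestStats:
    opened: datetime
    merged: datetime
    ai_suggestions: int    # comments the AI reviewer posted
    ai_accepted: int       # suggestions the author applied
    issues_by_ai: int      # confirmed issues first raised by the AI
    issues_by_humans: int  # confirmed issues first raised by human reviewers


def review_metrics(prs):
    """Summarize review cycle time, AI acceptance rate, and AI share of issues."""
    if not prs:
        raise ValueError("need at least one pull request")
    cycle_hours = [(pr.merged - pr.opened).total_seconds() / 3600 for pr in prs]
    suggested = sum(pr.ai_suggestions for pr in prs)
    accepted = sum(pr.ai_accepted for pr in prs)
    ai_issues = sum(pr.issues_by_ai for pr in prs)
    total_issues = ai_issues + sum(pr.issues_by_humans for pr in prs)
    return {
        "median_cycle_hours": median(cycle_hours),
        "ai_acceptance_rate": accepted / suggested if suggested else 0.0,
        "ai_share_of_issues": ai_issues / total_issues if total_issues else 0.0,
    }


SAMPLE_PRS = [
    PullRequestStats(datetime(2026, 1, 5, 9), datetime(2026, 1, 5, 15), 10, 6, 4, 1),
    PullRequestStats(datetime(2026, 1, 6, 9), datetime(2026, 1, 7, 9), 5, 1, 2, 3),
]
```

Tracking these numbers month over month shows whether configuration changes are actually raising the acceptance rate or just hiding findings.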

What's Next

The era of purely manual code reviews is quickly giving way to a more efficient, AI-augmented future. As an AI engineer, I've seen firsthand how AI code review tools have become a strategic imperative for any serious development team. They empower us to deliver faster, cleaner, and safer code by automating tedious tasks, enforcing consistent quality, proactively identifying bugs and security vulnerabilities, and significantly reducing technical debt. They free up our most valuable asset, our human engineers, to focus on innovation, complex problem-solving, and the truly creative aspects of software development. Embrace AI in your code review pipeline; it's an investment in your team's productivity, your product's reliability, and your organization's long-term success.