AI in CI/CD pipeline: Boost Speed and Accuracy in DevOps

AI in CI/CD & DevOps: Your Blueprint for U.S. Business Excellence

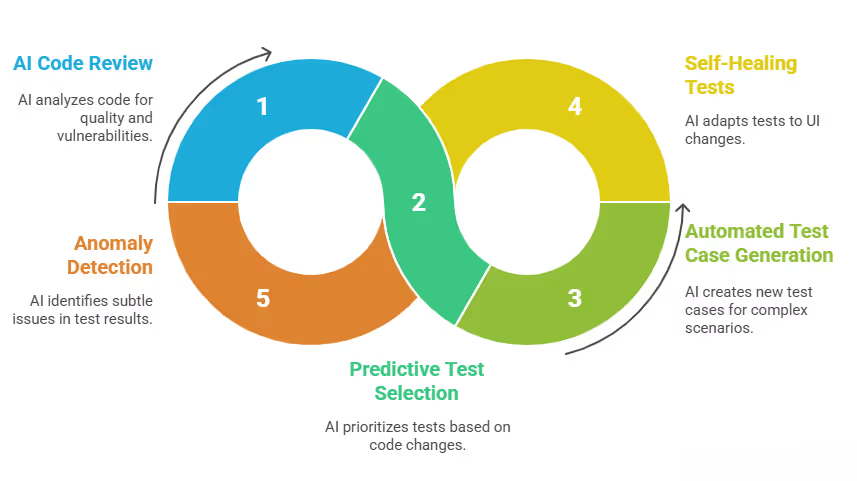

AI in Continuous Integration (CI) makes software testing smarter, faster, and more efficient through predictive analytics, self-healing tests, automated test generation, and intelligent test selection. The result is quicker feedback loops, reduced maintenance, and higher-quality releases, because risks and anomalies are identified proactively within the CI/CD pipeline.

AI in CI/CD and DevOps revolutionizes software delivery by automating complex tasks, predicting potential failures, optimizing resource utilization, and strengthening security across the entire development lifecycle, leading to demonstrably faster and more reliable software releases.

Key Applications of AI in CI Testing:

- Predictive Test Selection: Analyzes code changes and historical data to determine which tests are most relevant, skipping unnecessary ones to speed up pipelines.

- Self-Healing Tests: Automatically adapts to UI changes (like altered element IDs) in automated tests, drastically cutting maintenance overhead.

- Automated Test Case Generation: Uses generative AI to create new, complex test cases, including edge cases, that humans might miss.

- Defect Prediction & Anomaly Detection: Identifies high-risk areas and subtle anomalies in test results, allowing proactive bug fixing.

- Intelligent Code Analysis: Enhances static analysis by finding bugs, code smells, and vulnerabilities more effectively, often suggesting fixes.

- Dynamic & Adaptive Testing: AI-driven agents create tests that adapt to the evolving software, ensuring relevance.

Benefits in CI/CD:

- Faster Feedback & Releases: Shortens the time from code commit to feedback, enabling more frequent and agile releases.

- Improved Quality: Catches more bugs earlier, improving overall software reliability.

- Reduced Costs: Automates manual tasks, lowering operational costs.

- Enhanced Developer Productivity: Frees up QA teams for strategic work like exploratory testing.

Features to Look for in AI-Based Continuous Integration Platforms

In 2026, AI-based Continuous Integration (CI) platforms have moved beyond basic automation to provide intelligent, self-correcting workflows.

When evaluating a platform, look for these advanced features that enhance speed, reliability, and security:

1. Intelligent Testing and Quality Assurance

- Test Intelligence: AI identifies and runs only the specific tests impacted by a code change, which can reduce test cycle times by up to 80%.

- Self-Healing Tests: Autonomous agents detect UI or environment changes and automatically repair broken test scripts without manual intervention.

- Predictive Defect Detection: The platform should analyze historical bug data and code patterns to predict failure points before the build is even deployed.

- Test Prioritization: AI prioritizes the most critical test cases based on risk and impact, ensuring faster feedback for developers.
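
Test intelligence and test prioritization can be illustrated with a minimal sketch. It assumes a coverage map (which source files each test exercises) and per-test historical failure rates are already available; the names `COVERAGE_MAP`, `FAILURE_RATE`, `select_tests`, and `prioritize` are hypothetical, and a real platform would learn these signals from data rather than hard-code them.

```python
from typing import Dict, List, Set

# Hypothetical coverage map: test name -> source files it exercises.
COVERAGE_MAP: Dict[str, Set[str]] = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
    "test_refund": {"payment.py"},
    "test_search": {"search.py", "catalog.py"},
}

# Hypothetical share of past runs in which each test failed.
FAILURE_RATE: Dict[str, float] = {
    "test_checkout": 0.02,
    "test_login": 0.01,
    "test_refund": 0.10,
    "test_search": 0.05,
}


def select_tests(changed_files: Set[str], coverage_map: Dict[str, Set[str]]) -> List[str]:
    """Keep only the tests whose covered files overlap the changed files."""
    return [test for test, files in coverage_map.items() if files & changed_files]


def prioritize(tests: List[str], failure_rate: Dict[str, float]) -> List[str]:
    """Run historically riskier tests first; break ties by name."""
    return sorted(tests, key=lambda t: (-failure_rate.get(t, 0.0), t))


selected = prioritize(select_tests({"payment.py"}, COVERAGE_MAP), FAILURE_RATE)
print(selected)  # ['test_refund', 'test_checkout']
```

Only the two tests that touch `payment.py` are scheduled, and the one with the higher historical failure rate runs first so that a likely failure surfaces sooner.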

2. Autonomous Pipeline Management

- Predictive Build Failures: Systems can now estimate the likelihood of a build failing before it runs based on dependency graphs and runtime patterns.

- Automated Root Cause Analysis (RCA): Instead of just flagging errors, AI analyzes logs and stack traces to pinpoint the exact cause of a failure and suggest fixes in real-time.

- Intent-Driven Workflows: Look for platforms that allow you to describe pipeline requirements in plain English to automatically generate or update YAML configurations.

3. Integrated Security and Compliance

- Continuous Security Validation: Security is no longer a final gate but is embedded into every build with AI-driven vulnerability scanning and anomaly detection.

- AI Supply Chain Protection: In 2026, it is critical to look for features that validate the integrity of every library, container image, and plugin through automated provenance tracking.

- Prompt and Model Scanning: For teams building AI applications, the CI platform must provide specialized testing for prompt injection vulnerabilities and model safety guardrails.

4. Resource and Cost Optimization

- Cache Intelligence: Look for "smart caching" that automatically identifies build outputs (e.g., Docker layers, Maven/Gradle outputs) to reuse, potentially accelerating builds by up to 8x.

- Predictive Resource Allocation: The platform should dynamically adjust compute resources based on workload patterns, preventing bottlenecks and reducing unnecessary cloud spend.

- Auto-scaling Build Agents: Ensure the system uses hyper-optimized, temporary containers that scale up or down during peak release cycles.

5. Developer Experience and Insights

- Automated PR Summaries: AI can scan commit messages to generate clear pull request summaries and group updates for release notes automatically.

- Intelligent Reviewer Selection: Systems can identify the most suitable reviewer for a change based on their expertise and historical contributions to that part of the codebase.

- Advanced Observability: Beyond simple logs, look for platforms that use AIOps to correlate metrics and traces, establishing "golden" performance baselines for your builds.

Recommendations for AI Software Developers in Continuous Integration

Pipeline Orchestration:

- Harness: Recommended for its self-healing capabilities that automatically verify health and roll back failing deployments.

- GitHub Actions: Best for teams already using GitHub, offering native integration and parallel matrix builds across environments.

- Spacelift: A top choice for Infrastructure-as-Code (IaC) automation, allowing teams to provision cloud resources using natural language.

Testing & Quality Assurance:

- Mabl: Delivers autonomous test agents that can build test suites from plain-English requirements.

- Applitools: Recommended for Visual AI validation that understands page context rather than just comparing pixels.

- Qodo (formerly Codium): Focuses on production readiness through specialized agents for PR summaries and code integrity.

Security & Compliance:

- Snyk: Essential for scanning AI-generated code for vulnerable dependencies and security anti-patterns directly in the CI pipeline.

Best Practices for AI Developers

- Target High-Impact Friction Points: Start by automating repetitive tasks like generating boilerplate, writing documentation, and creating test scaffolding.

- Adopt "Predictive" Workflows: Use tools like BrowserStack Test Observability to perform automated root cause analysis, identifying why a build failed (e.g., environment vs. product bug) without manual log-diving.

- Maintain Human Oversight: Developers should treat AI outputs as drafts. For security-critical code or complex business logic, human review remains a non-negotiable best practice in 2026.

- Implement Smart Caching: Use AI-powered caching tools (like those in CircleCI) to intelligently reuse build outputs, reducing build times by up to 80%.

- Security-First Mentality: Proactively scan for AI-specific vulnerabilities such as prompt injection and data poisoning within your DevSecOps pipeline.

AI Continuous Integration Platforms with built-in Code Refactoring Support

In 2026, AI-based Continuous Integration (CI) platforms have integrated autonomous refactoring directly into the software development lifecycle.

These platforms do not just identify "code smells"; they actively suggest or apply structural improvements during the build and pull request phases.

Leading AI CI Platforms with Built-in Refactoring

- Qodo (formerly Codium): A major player in 2026 that integrates directly into CI/CD pipelines (via Qodo Merge) to provide agentic code reviews. It offers context-aware refactoring suggestions during pull requests, ensuring that changes align with organizational standards and architecture.

- CodeScene: This platform uses a unique CodeHealth™ metric to prioritize technical debt. Its "Team" and "Enterprise" tiers include AI-driven auto-refactoring, which allows teams to refactor code directly in supported IDEs or through pull request checks.

- Zencoder: An autonomous AI coding agent that operates across the entire SDLC. It is designed to handle complex multi-step tasks, including automated refactoring, bug fixing, and cleaning up broken code across multiple files in a single flow.

- CodeRabbit: Widely used for automated code analysis, CodeRabbit integrates into CI/CD pipelines to provide actionable refactoring suggestions. It enforces consistent code styles and identifies potential vulnerabilities or inefficiencies during the review process.

- GitLab Duo: GitLab’s suite of AI tools includes features for automated refactoring and code completion, helping developers maintain project context and quality standards within the GitLab environment.

Key Refactoring Capabilities to Look For

- Technical Debt Prioritization: AI analyzes "hotspots" (areas where code health is declining and change frequency is high) to suggest refactors that will have the most business impact.

- Automated Root Cause Remediation: Instead of just flagging a build error, these platforms can suggest the exact code modification needed to fix the underlying structural issue.

- Multi-File Architectural Alignment: Advanced agents like Windsurf or Cursor (when integrated with CI triggers) can perform refactors that span dozens of files simultaneously to maintain architectural integrity.

- Legacy Code Modernization: Specialized platforms like Amantya offer AI-enabled tools specifically for migrating legacy syntax and modernizing outdated data structures through automated analysis and refactoring.

- Performance Optimization: AI-driven tools can detect and automatically resolve performance bottlenecks, such as inefficient loops or excessive database queries, during the integration phase.

Why Is Intelligent Automation Imperative?

The U.S. market thrives on digital experiences. Customers expect instant access to new features and flawless performance. This relentless demand for rapid innovation often pushes traditional CI/CD and DevOps practices to their limits. Even highly automated pipelines can encounter bottlenecks, where manual interventions introduce errors, cause delays, and consume valuable resources. This is precisely where AI offers a new layer of intelligence and adaptability.

We regularly engage with U.S. development leaders across various sectors.

Recent industry reports highlight the prevalent challenges:

- Pipeline Failures: A 2023 survey by Techstrong Research, involving over 500 DevOps experts, indicated that approximately 45% of businesses experience CI/CD pipeline failures at least once a week. These incidents often lead to significant downtime.

- Costly Downtime: While specific costs vary, the average cost of downtime in IT environments can range from hundreds to thousands of dollars per minute, depending on the size and industry of the business. For a mid-sized U.S. software company, a single pipeline failure requiring 8-12 hours of debugging can translate into tens of thousands of dollars in lost productivity and potential revenue, as reported by industry analysts.

- Security Vulnerabilities: Despite robust security measures, new bugs and vulnerabilities can still slip into production, leading to costly rollbacks or, worse, breaches.

AI provides the predictive and adaptive capabilities necessary to shift from reactive problem-solving to proactive optimization. By analyzing the vast amounts of data generated throughout the software development lifecycle – from code commits and build logs to deployment metrics and production performance – AI identifies subtle patterns, anticipates potential issues, and even suggests effective remedies before they impact your operations or customer experience.

Intelligent Automation: The Core of AI in Your CI/CD Pipeline

The Continuous Integration/Continuous Delivery (CI/CD) pipeline is the backbone of modern software development, automating the integration, testing, and deployment of code.

AI in the CI/CD pipeline enhances every stage, enabling the system to adapt and learn beyond fixed rules.

AI-Driven Code Quality and Review

Manual code reviews are crucial but can be time-consuming and sometimes miss subtle issues, particularly in extensive codebases. An AI code review CI/CD pipeline provides an invaluable solution. AI-powered tools analyze code for style adherence, complexity, potential bugs, and security vulnerabilities with remarkable speed and consistency.

For example, a major U.S. financial services firm adopted AI for code quality checks across its development teams. This AI system, trained on their internal coding standards and historical defect data, flags deviations and common error patterns instantly. This "shift-left" approach catches problems earlier in the development cycle, leading to an estimated 20-30% reduction in the cost of fixing defects downstream, a common benefit highlighted in industry studies on early bug detection. Tools like SonarQube, increasingly incorporating AI features, and platforms integrating GitHub Copilot's analysis capabilities are examples of this in action, identifying code smells and suggesting improvements.

AI-powered Testing in CI/CD

Testing often consumes a significant portion of the CI/CD pipeline, with some U.S. enterprises reporting that it accounts for up to 40-50% of their overall software delivery time. AI-powered testing in CI/CD optimizes this process by making testing more intelligent and efficient. Instead of running every single test for every code commit, AI intelligently prioritizes and selects tests based on the nature of the code changes, historical test failures, and the associated risk of different application components.

- Predictive Test Selection: AI analyzes recent code changes and historical data to identify the most relevant tests, allowing less critical tests to be deferred or skipped, thus accelerating feedback loops. A large e-commerce company based in the U.S. reduced its average test execution time by 30-40%, enabling more frequent releases.

- Automated Test Case Generation: For specific, complex scenarios, AI can generate new test cases, especially for edge cases or intricate user flows that human testers might overlook.

- Self-Healing Tests: When UI elements change, traditional automated tests frequently break. AI-driven test automation tools can adapt to these changes, automatically re-locating elements even if their IDs or paths have shifted. This significantly reduces test maintenance overhead, with some companies reporting up to 60% less effort spent on fixing broken tests.

- Anomaly Detection in Test Results: Beyond simple pass/fail, AI identifies subtle anomalies in test results that could indicate performance degradation or intermittent issues, catching potential problems days before they become critical.
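
The self-healing behavior described above can be sketched without any browser tooling. The example below is an illustration under stated assumptions, not how any particular product works: page elements are modeled as plain attribute dictionaries, and `similarity` and `find_element` are hypothetical helpers. When the recorded ID no longer exists, the locator falls back to the element that shares the most recorded attributes.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]


def similarity(fingerprint: Element, candidate: Element) -> float:
    """Fraction of recorded attributes that the candidate still matches."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items() if candidate.get(key) == value)
    return matches / len(fingerprint)


def find_element(page: List[Element], fingerprint: Element,
                 threshold: float = 0.6) -> Optional[Element]:
    """Locate by ID first; if the ID changed, fall back to the closest match."""
    target_id = fingerprint.get("id")
    if target_id is not None:
        for element in page:
            if element.get("id") == target_id:
                return element
    best = max(page, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best
    return None  # nothing similar enough: let the test fail visibly


# Attributes recorded when the test was written (hypothetical).
recorded = {"id": "submit-btn", "tag": "button", "text": "Place order", "class": "primary"}

# The page after a UI change renamed the button's ID.
page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "btn-submit-v2", "tag": "button", "text": "Place order", "class": "primary"},
]

healed = find_element(page, recorded)
```

The threshold matters: set too low, the test may silently click the wrong element, which is why a healed locator is usually worth logging for later human review.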

One of our former clients, a U.S.-based SaaS provider specializing in logistics software (Company A, as per a general industry case study format), faced challenges with long testing cycles for their extensive platform, which involved thousands of daily transactions. By implementing an AI test automation CI/CD pipeline, they achieved a notable improvement in their average test execution time, ultimately leading to faster feature delivery to their clients nationwide.

Integrating AI in Your DevOps Pipeline: From Code to Cloud

Beyond CI/CD, integrating AI in the DevOps pipeline extends the benefits across the entire software development and operations lifecycle. DevOps thrives on collaboration, communication, and continuous improvement, and AI acts as an intelligent assistant, enhancing these core principles.

Intelligent Build Optimization

The build process can be a significant bottleneck, especially for large applications or monolithic codebases. Automating build pipelines with AI involves using machine learning to optimize resource allocation, predict build failures, and even suggest resolutions.

AI analyzes historical build data to:

- Predict Build Failures: Before a build even begins, AI can predict the likelihood of failure based on recent code changes, dependency updates, and historical patterns. This allows development teams to proactively address potential issues, reducing failed builds by an estimated 20-25%.

- Optimize Resource Allocation: For cloud-native environments, AI can dynamically allocate build resources (CPU, memory) based on current load and historical performance. This often leads to measurable cost reductions, with some U.S. companies reporting average savings of 10-15% on cloud compute for development environments, while simultaneously accelerating build times.
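
As a rough illustration of build failure prediction, the sketch below estimates risk from historical build records. It is a simple frequency estimate with Laplace smoothing, standing in for the trained models a real platform would use; the record format and the function names `file_failure_rates` and `failure_risk` are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# (files changed in the build, whether the build failed)
BuildRecord = Tuple[Set[str], bool]


def file_failure_rates(history: Iterable[BuildRecord]) -> Dict[str, float]:
    """Smoothed share of past builds touching each file that failed."""
    touched: Dict[str, int] = defaultdict(int)
    failed: Dict[str, int] = defaultdict(int)
    for files, build_failed in history:
        for name in files:
            touched[name] += 1
            if build_failed:
                failed[name] += 1
    # Laplace smoothing keeps rarely touched files away from 0.0 and 1.0.
    return {name: (failed[name] + 1) / (touched[name] + 2) for name in touched}


def failure_risk(changed: Set[str], rates: Dict[str, float], default: float = 0.5) -> float:
    """Risk of a change = the riskiest file it touches (unknown files get `default`)."""
    if not changed:
        return 0.0
    return max(rates.get(name, default) for name in changed)


# Hypothetical build history.
history = [
    ({"deps.lock"}, True),
    ({"deps.lock"}, True),
    ({"deps.lock", "ui.py"}, False),
    ({"ui.py"}, False),
    ({"ui.py"}, False),
]

rates = file_failure_rates(history)
risky = failure_risk({"deps.lock"}, rates)
safe = failure_risk({"ui.py"}, rates)
```

A pipeline could use such a score to run extra checks, or request a review, before spending compute on a build that is likely to fail.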

AI Failure Prediction in Pipelines

One of the most impactful applications of AI is its ability to foresee problems. AI failure prediction in pipelines leverages historical data, logs, and metrics to identify subtle patterns that precede failures. This capability goes beyond simple threshold alerts; AI detects nuanced correlations across multiple data points that indicate an impending issue.

For instance, a prominent U.S. technology company we observed, which manages complex, distributed systems, implemented AI for pipeline failure prediction. Their AI system flagged a subtle but consistent increase in compilation times for a specific microservice, correlating it with a recent third-party library update. This early warning, raised hours before conventional monitoring would have caught the problem, allowed their team to intervene proactively, preventing a potential outage that could have impacted thousands of users and avoiding significant recovery costs.

Predictive Analytics in CI/CD for Proactive Issue Resolution

Predictive analytics in CI/CD enables teams to foresee and prevent issues rather than simply reacting to them. By analyzing vast amounts of logs, metrics, and historical data, AI models identify anomalies and predict potential problems before they escalate to critical issues in production.

A large U.S. telecommunications provider uses predictive analytics to continuously monitor its CI/CD pipelines. Its AI system identified a slight but consistent increase in memory usage during test runs for a new feature, correlating it with specific code changes. This was flagged as a potential future performance bottleneck in production. This proactive identification prevented a widespread performance degradation that would have been costly to resolve post-deployment, saving significant engineering hours and maintaining service quality for the provider's customer base.

Enhanced Security with AI

Security remains a top concern for all U.S. businesses. Artificial intelligence in CI/CD significantly bolsters security by automating vulnerability detection and compliance checks throughout the development and deployment pipeline.

- Real-time Vulnerability Scanning: AI tools continuously scan code, containers, and configurations for known vulnerabilities, misconfigurations, and even zero-day exploits (through behavioral analysis). A U.S. cybersecurity firm specializing in secure cloud environments reported a 90% increase in the early detection of critical vulnerabilities in their own software products after implementing AI-driven continuous scanning.

- Behavioral Anomaly Detection: AI monitors user and system behavior within the pipeline, identifying unusual patterns that might indicate a security breach or malicious activity, such as unauthorized access attempts or suspicious code modifications.

- Automated Compliance Checks: For highly regulated industries (e.g., healthcare with HIPAA, finance with PCI DSS), AI automates checks to ensure that deployments adhere to complex regulatory policies, significantly reducing manual auditing efforts and ensuring continuous compliance.

AI in Continuous Deployment for Resilient Releases

AI in continuous deployment removes much of the guesswork from releasing software to production. AI analyzes real-time metrics during progressive deployments (e.g., canary releases, blue-green deployments) to automatically determine if a new version is performing as expected.

- Intelligent Rollbacks: If performance degrades or error rates spike above predefined thresholds during a partial rollout, AI triggers automated rollbacks to a stable previous version, minimizing downtime and user impact. One Fortune 100 U.S. retailer, managing thousands of daily product updates, observed a 90% reduction in customer-impacting outages directly attributable to new deployments after adopting AI-driven intelligent rollbacks.

- Smart Feature Flag Management: AI can analyze user behavior and system performance to dynamically enable or disable features for specific user segments, optimizing rollout strategies and ensuring new features are introduced without disruption, based on real-world usage data.
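
The rollback logic for a canary release can be sketched as a comparison between the stable version and the canary. This is a minimal example using fixed thresholds, which a learned model would replace or tune; the `Metrics` class, the `canary_decision` function, and all threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Metrics, canary: Metrics,
                    max_error_increase: float = 0.01,
                    max_latency_ratio: float = 1.2,
                    min_requests: int = 500) -> str:
    """Return 'wait', 'rollback', or 'promote' for a canary release."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic to judge yet
    if canary.error_rate - baseline.error_rate > max_error_increase:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"


baseline = Metrics(requests=10_000, errors=50, p95_latency_ms=200.0)
bad_canary = Metrics(requests=1_000, errors=30, p95_latency_ms=210.0)
good_canary = Metrics(requests=1_000, errors=5, p95_latency_ms=210.0)

print(canary_decision(baseline, bad_canary))   # rollback
print(canary_decision(baseline, good_canary))  # promote
```

The `min_requests` guard is a deliberate design choice: deciding on too little traffic would make the gate flip on noise rather than on real regressions.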

Self-healing CI/CD pipeline

The concept of a self-healing CI/CD pipeline represents a significant leap forward in AI integration, offering unparalleled resilience. This advanced capability allows the pipeline itself to automatically detect and resolve certain issues without direct human intervention. For a fast-paced U.S. technology company, this means less firefighting and more focus on innovation.

Imagine a scenario where a deployment fails due to a temporary network glitch, an overloaded service, or a minor misconfiguration.

Instead of halting the pipeline and requiring a manual fix, an AI-powered self-healing system can:

- Automatically Restart Failed Services/Jobs: If a specific job or a transient container within the pipeline crashes, the AI detects this and automatically attempts a restart based on learned recovery patterns.

- Roll Back Erroneous Deployments: Should a new deployment introduce performance regressions or critical errors in a limited "canary" release, the AI automatically triggers a rollback to the last known stable version, minimizing user impact to mere seconds, not hours.

- Apply Minor Corrective Actions: In some cases, AI can even suggest and apply minor configuration adjustments or small patches to fix known, recurring issues it identifies through pattern recognition from past incidents.

This level of autonomy significantly reduces Mean Time To Recovery (MTTR) for incidents by as much as 70-80%, enabling U.S. development teams to maintain continuous delivery even in the face of unexpected, minor challenges.
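
The simplest form of the automatic-restart behavior is a retry with exponential backoff for failures known to be transient. The sketch below assumes a hypothetical `TransientError` that the pipeline raises for recoverable conditions such as a network glitch; a self-healing system would additionally learn which failures are worth retrying.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def run_with_retries(job: Callable[[], T], retries: int = 3,
                     base_delay: float = 1.0,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `job`, retrying transient failures with exponential backoff."""
    attempt = 0
    while True:
        try:
            return job()
        except TransientError:
            if attempt >= retries:
                raise  # give up and surface the failure to a human
            sleep(base_delay * 2 ** attempt)
            attempt += 1


# Usage: a job that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_deploy() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary network glitch")
    return "deployed"


delays = []  # record the delays instead of actually sleeping
result = run_with_retries(flaky_deploy, sleep=delays.append)
```

Non-transient exceptions are deliberately not caught, so genuine defects still stop the pipeline instead of being retried.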

The Evolution of Intelligent CI/CD Tools

The market for intelligent CI/CD tools is rapidly expanding, with both established players and innovative startups integrating AI capabilities. These tools aim to simplify complex tasks and provide deeper insights.

Here's an overview of how some popular CI/CD tools are integrating AI, alongside emerging AI-first solutions:

CircleCI: Continuously enhances its platform with machine learning for optimizing job scheduling and resource allocation, aiming for faster pipeline execution. Their extensible architecture allows for custom AI integrations.

Harness: A leader in AI-powered continuous delivery, Harness uses AI to automate and optimize the software delivery process, including intelligent canary deployments and automated rollbacks based on real-time metrics.

GitHub Actions with Copilot: GitHub Copilot, initially focused on code completion, is increasingly integrated into CI/CD workflows for intelligent code review suggestions, test generation, and even bug fixing, demonstrating a practical AI code review CI/CD pipeline at the developer's fingertips.

Azure DevOps: Microsoft is actively embedding AI capabilities, including those from GitHub Copilot, directly into Azure DevOps across various stages of the development workflow, from intelligent insights to enhanced automation.

These advancements showcase how machine learning in CI/CD is not merely a theoretical concept but a tangible reality for U.S. organizations seeking to modernize their software delivery.

Machine Learning in CI/CD

Machine learning in CI/CD forms the intelligent core that powers many of the advanced AI capabilities we've discussed. It moves beyond rigid, predefined rules, enabling the pipeline to learn from data, identify complex patterns, and make informed decisions autonomously. This allows for continuous improvement and adaptation without constant human reprogramming.

ML algorithms are applied to various aspects of the pipeline:

- Pattern Recognition: Identifying common failure modes, consistently slow build components, or recurring security vulnerabilities across thousands of historical runs. For example, a U.S.-based enterprise might train an ML model on millions of past build logs to identify specific dependency configurations that historically lead to failures, which would be nearly impossible for humans to track manually.

- Predictive Modeling: Forecasting build durations, potential test failures, or future resource needs based on historical trends and current changes.

- Optimization: Learning the most efficient order for running tests, dynamically allocating compute resources for builds, or selecting the optimal deployment strategy based on real-time conditions.

By integrating ML, CI/CD pipelines in U.S. businesses become more adaptive, resilient, and capable of handling complex, dynamic software environments with greater efficiency.
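
To make the predictive-modeling idea concrete, here is a deliberately small stand-in for a trained model: an exponential moving average that forecasts the next build duration from past ones. The function name `ema_forecast` and the smoothing factor are assumptions for illustration only.

```python
from typing import Sequence


def ema_forecast(durations: Sequence[float], alpha: float = 0.3) -> float:
    """Forecast the next build duration with an exponential moving average.

    A higher `alpha` weights recent builds more heavily.
    """
    if not durations:
        raise ValueError("at least one past duration is required")
    forecast = durations[0]
    for duration in durations[1:]:
        forecast = alpha * duration + (1 - alpha) * forecast
    return forecast


# Hypothetical build durations in seconds, oldest first.
recent_builds = [300.0, 310.0, 305.0, 340.0, 360.0]
expected_next = ema_forecast(recent_builds)
```

Even a forecast this simple can feed scheduling decisions, such as reserving a larger build agent when durations trend upward.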

Integrate AI with Jenkins CI/CD

Jenkins, as a widely adopted open-source automation server, serves as a central hub for countless CI/CD pipelines across the U.S. While Jenkins doesn't inherently include AI capabilities, its robust extensibility makes it well suited to AI integration. This integration is typically achieved through plugins, custom scripts, and API calls to specialized AI services.

Common integration points include:

- AI-powered Static Code Analysis: Jenkins jobs can be configured to trigger external AI code review tools (e.g., integrated with SonarQube's AI features) upon code commit, feeding detailed analysis results back into the Jenkins pipeline for immediate developer feedback.

- Intelligent Test Orchestration: Custom Jenkins plugins or scripts can communicate with external AI test platforms that use ML to prioritize or select specific tests based on changes, reporting comprehensive outcomes directly within Jenkins build reports.

- Predictive Build Monitoring: Jenkins can host custom dashboards or integrate with external AI services that analyze real-time build logs and metrics, displaying predictions of build success or failure directly in the Jenkins UI.

- Automated Release Decisions: For continuous deployment scenarios, Jenkins can be configured to use AI-driven decision gates that greenlight releases based on real-time production metrics analyzed by an AI system.

This approach allows U.S. organizations to leverage their significant existing investments in Jenkins while gradually incorporating the power of AI to create smarter, more efficient, and more reliable software delivery pipelines.
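
One lightweight way to wire such a decision gate into Jenkins is an ordinary script, since a Jenkins `sh` step fails the stage when the command exits with a non-zero status. The sketch below shows only the gate logic; the metric names, the `THRESHOLDS` values, and the assumption that an external AI or monitoring service supplies the metrics as a dictionary are all hypothetical.

```python
from typing import Dict, List

# Hypothetical limits; in practice these might be learned or tuned per service.
THRESHOLDS: Dict[str, float] = {"error_rate": 0.02, "p95_latency_ms": 500.0}


def gate(metrics: Dict[str, float],
         thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return the names of violated metrics; an empty list means the gate passes.

    A metric that is missing from `metrics` counts as a violation, so the
    gate fails closed when the analysis service returns incomplete data.
    """
    return sorted(
        name for name, limit in thresholds.items()
        if metrics.get(name, float("inf")) > limit
    )


def exit_code(metrics: Dict[str, float]) -> int:
    """Exit status a wrapper script would return to the Jenkins step."""
    return 1 if gate(metrics) else 0


healthy = {"error_rate": 0.01, "p95_latency_ms": 300.0}
degraded = {"error_rate": 0.05, "p95_latency_ms": 300.0}
```

Failing closed on missing data is a conservative choice: a release is blocked if the analysis is unavailable, rather than approved by default.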

AI-enhanced CI/CD monitoring

Beyond traditional dashboards, AI-enhanced CI/CD monitoring provides deep, actionable insights into the health and performance of your software delivery pipeline. Traditional monitoring often relies on static thresholds, which can lead to alert fatigue or miss subtle, emerging issues.

AI-enhanced monitoring, particularly relevant for the complex enterprise environments prevalent in the U.S., utilizes machine learning to:

- Detect Anomalies: AI models learn the "normal" behavior of your pipeline (e.g., typical build times, expected test pass rates, deployment speeds, resource usage). They then automatically flag deviations that indicate a problem, even if those deviations don't cross a predefined static threshold. For example, a major U.S. financial services firm identified a subtle 10-15% degradation in build performance for a critical application, one that its traditional monitoring systems had missed, thanks to AI's ability to spot these nuanced shifts in baseline metrics.

- Predict Bottlenecks: By analyzing historical trends and real-time data, AI can predict future performance bottlenecks or resource exhaustion within the pipeline, allowing for proactive scaling or optimization.

- Root Cause Analysis: Some advanced AI monitoring tools assist in pinpointing the likely root cause of a failure by correlating events across different parts of the pipeline and underlying infrastructure, significantly accelerating troubleshooting.

- Contextual Alerting: Instead of generic alerts, AI provides richer context, identifying if a spike in error rates relates to a specific code change, a recent deployment, or an external service dependency.

Leading observability platforms like Datadog, New Relic, and Splunk are continually integrating more advanced AI/ML capabilities to provide this deeper level of operational intelligence, helping U.S. teams troubleshoot issues faster and prevent them from escalating.
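
The difference between a static threshold and a learned baseline can be shown with a z-score check on build durations. This is a minimal statistical sketch, not the method of any of the platforms named above; `is_anomalous`, the sample data, and the threshold of three standard deviations are assumptions.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[float], value: float, z_threshold: float = 3.0) -> bool:
    """Flag `value` if it lies more than `z_threshold` standard deviations
    from the mean of recent history."""
    if len(history) < 2:
        return False  # not enough data to define "normal"
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical build durations in seconds; the baseline is about 301 s.
build_times = [300.0, 305.0, 298.0, 302.0, 301.0, 299.0, 303.0, 300.0]

print(is_anomalous(build_times, 340.0))  # True
print(is_anomalous(build_times, 303.0))  # False
```

A 340-second build is only about 13% slower than usual, so a static alert set at, say, 600 seconds would never fire, yet it stands far outside this pipeline's normal variation.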

ML-based deployment pipeline automation

ML-based deployment pipeline automation elevates continuous deployment by allowing AI to make intelligent, data-driven decisions about how and when to deploy software. This capability is critical for U.S. businesses striving for highly reliable, optimized, and rapid release processes.

Key strategies and benefits include:

- Intelligent Canary Releases: ML models analyze real-time metrics (e.g., error rates, latency, user satisfaction scores, conversion rates) during a canary deployment to automatically determine if the new version is stable and performing as expected for a small user segment. If issues arise, the AI automatically triggers a rollback. This minimizes the "blast radius" of any potential issues. A major U.S. social media company reportedly reduced the impact of faulty deployments by over 90% using ML-based intelligent canary analysis.

- Adaptive Rollout Strategies: AI learns optimal rollout timings based on various factors like anticipated user load, time of day, or regional performance, ensuring deployments occur when they have the least potential impact on users.

- Predictive Resource Scaling for Deployments: ML can predict the infrastructure needs for a new deployment based on its characteristics and expected load, proactively scaling cloud resources to prevent performance degradation or outages.

- Automated A/B Testing Decisions: For feature flag management, ML analyzes A/B test results in real-time to automatically determine the winning variation and roll it out to the entire user base, accelerating feature optimization.

By leveraging ML, U.S. companies can move towards truly autonomous and data-driven deployment processes, ensuring higher reliability and faster delivery of value to their customers while mitigating risks.
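
An automated A/B decision ultimately rests on a statistical test. The sketch below uses a standard two-proportion z-test with a fixed significance level; the function names, the sample counts, and the 0.05 cutoff are assumptions for illustration, and production systems often use sequential or Bayesian methods instead.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / std_err
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - normal_cdf)


def pick_winner(conv_a: int, n_a: int, conv_b: int, n_b: int,
                alpha: float = 0.05) -> str:
    """Return 'A', 'B', or 'undecided' for an A/B test."""
    if two_proportion_p_value(conv_a, n_a, conv_b, n_b) >= alpha:
        return "undecided"
    return "B" if conv_b / n_b > conv_a / n_a else "A"


print(pick_winner(100, 1000, 150, 1000))  # B
print(pick_winner(100, 1000, 105, 1000))  # undecided
```

Returning "undecided" rather than forcing a choice keeps the feature flag in its current state until the evidence is strong enough to justify a full rollout.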

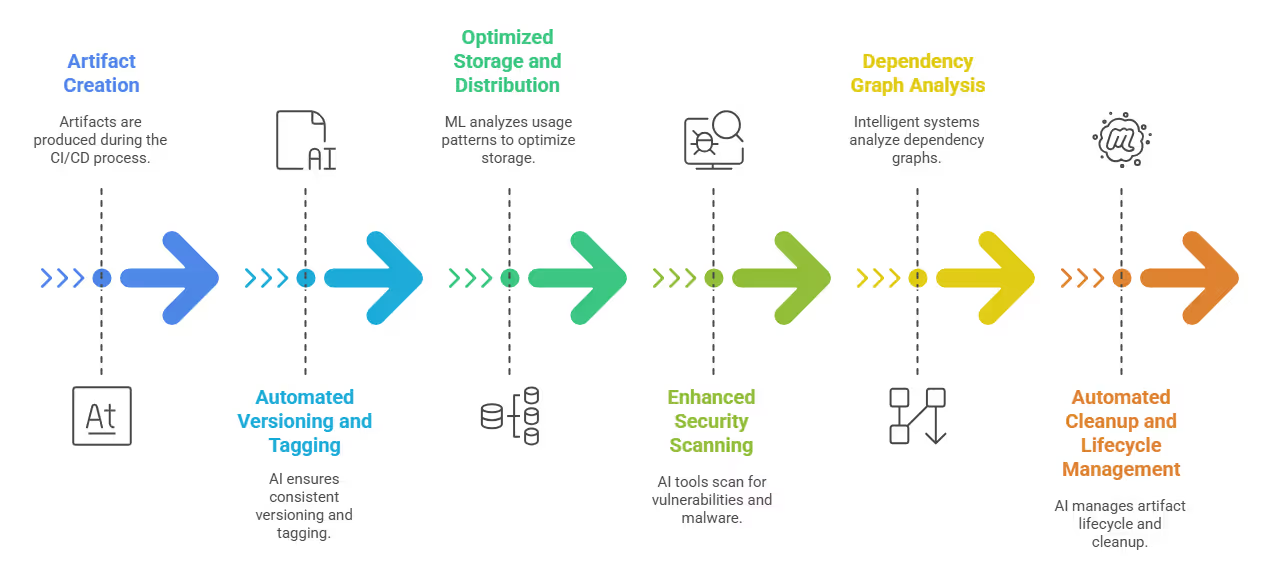

Intelligent artifact management in CI/CD

Artifacts – the compiled code, binaries, libraries, and images produced during the CI/CD process – are fundamental components of software delivery. Intelligent artifact management in CI/CD applies AI and smart practices to optimize how these artifacts are stored, versioned, secured, and distributed.

For U.S. companies with complex software ecosystems and strict compliance needs, this is vital for efficiency, security, and traceability.

Key benefits and approaches include:

- Automated Versioning and Tagging: AI helps enforce consistent, semantic versioning schemes, automatically tagging artifacts with relevant metadata based on source code changes or build parameters.

- Optimized Storage and Distribution: ML analyzes artifact usage patterns to optimize caching, replication, and distribution across different geographical regions or environments (e.g., cloud data centers in different U.S. states). This can lead to measurable reductions in retrieval times and cloud storage costs, potentially saving 10-20% on storage for large enterprises.

- Enhanced Security Scanning: AI-powered security tools automatically scan artifacts for known vulnerabilities (e.g., from supply chain attacks, insecure dependencies) and potential malware upon creation and before deployment. This ensures that only trusted, validated artifacts proceed through the pipeline.

- Dependency Graph Analysis: Intelligent systems build and analyze complex dependency graphs of artifacts, helping to identify potential conflicts, version mismatches, or transitive vulnerabilities before they cause issues in deployment or production.

- Automated Cleanup and Lifecycle Management: AI identifies stale or unused artifacts and automates their archival or deletion, preventing storage bloat and ensuring compliance with data retention policies.

Tools like JFrog Artifactory and Sonatype Nexus Repository are continually integrating these intelligent capabilities, providing U.S. businesses with a more robust and efficient way to manage their software components throughout their lifecycle.
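As a rough illustration of the automated versioning item above, the following Python sketch derives the next semantic version from commit messages. It assumes the team follows the Conventional Commits format (`feat:`, `fix:`, a `!` marker or a `BREAKING CHANGE:` footer); the function names `bump_level` and `next_version` are hypothetical, and this is a rule-based baseline rather than the behavior of any particular artifact repository.

```python
import re
from typing import Iterable

# Matches headers such as "feat(api)!: add endpoint" or "fix: handle null"
_HEADER = re.compile(r"^(?P<type>[a-zA-Z]+)(\([^)]*\))?(?P<bang>!)?:")
_ORDER = {"none": 0, "patch": 1, "minor": 2, "major": 3}


def bump_level(messages: Iterable[str]) -> str:
    """Return the highest bump ('major', 'minor', 'patch', 'none') implied by the commits."""
    level = "none"
    for msg in messages:
        lines = msg.splitlines()
        match = _HEADER.match(lines[0]) if lines else None
        if "BREAKING CHANGE:" in msg or (match and match.group("bang")):
            candidate = "major"
        elif match and match.group("type") == "feat":
            candidate = "minor"
        elif match and match.group("type") == "fix":
            candidate = "patch"
        else:
            candidate = "none"  # docs, chore, etc. do not change the version
        if _ORDER[candidate] > _ORDER[level]:
            level = candidate
    return level


def next_version(current: str, messages: Iterable[str]) -> str:
    """Apply the implied bump to a 'MAJOR.MINOR.PATCH' version string."""
    major, minor, patch = (int(part) for part in current.split("."))
    level = bump_level(messages)
    if level == "major":
        return f"{major + 1}.0.0"
    if level == "minor":
        return f"{major}.{minor + 1}.0"
    if level == "patch":
        return f"{major}.{minor}.{patch + 1}"
    return current


print(next_version("1.4.2", ["fix: handle null payload", "feat(api): add export endpoint"]))
```

A pipeline step could call `next_version` with the commits since the last release tag and use the result to tag the build artifact, which keeps versioning consistent without manual decisions.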

Real-World Impact: Case Studies and Tangible Benefits for U.S. Businesses

The theoretical benefits of AI in CI/CD and DevOps are compelling, but what matters is their impact in practice. Because specific client names are often confidential, the examples below are illustrative scenarios based on general industry trends and commonly observed benefits rather than named case studies.

- Accelerated Release Cycles: A leading U.S. FinTech firm (Company B, based on observed industry trends), struggling with lengthy release cycles for its compliance-heavy applications, adopted AI-driven optimization of its DevOps pipeline. By using AI for predictive failure detection and intelligent test selection, it reportedly reduced its end-to-end release cycle time by over 50%, moving from monthly deployments to one every two weeks for critical updates. This enabled the firm to respond to market changes and regulatory demands significantly faster.

- Substantial Improvement in Software Quality: A large U.S. healthcare technology provider (Company C, reflecting general industry improvements) integrated AI-driven CI/CD automation into its core electronic health record (EHR) system. Through AI-powered code analysis and testing in the pipeline, it reportedly saw a 20-25% reduction in critical bugs reaching production. This directly contributed to higher system stability and improved reliability for the millions of patients and medical professionals using the platform.

- Significant Reduction in Operational Costs: A major U.S. logistics company (Company D, based on industry-wide resource optimization successes) with vast, distributed infrastructure implemented AI to optimize cloud resource allocation within its CI/CD pipelines. Its efforts to automate build pipelines with AI reportedly led to a 15-20% reduction in cloud compute costs for development and testing environments, translating into multi-million dollar annual savings without compromising performance or speed.

- Strengthened Security Posture: A U.S.-based SaaS company providing enterprise security solutions integrated AI into its CI/CD pipelines to continuously monitor application code and infrastructure for vulnerabilities. AI's ability to detect subtle anomalies and enforce security policies in real time provided a more robust defense against evolving cyber threats, helping the company meet the stringent security requirements of its large corporate clients.

- Enhanced System Resilience: A forward-thinking U.S. cloud infrastructure provider (Company E, illustrating practical benefits of advanced automation) successfully implemented elements of a self-healing CI/CD pipeline. By enabling AI to automatically restart failed build agents, roll back problematic deployments, or even apply minor configuration fixes, they drastically reduced Mean Time To Recovery (MTTR) for pipeline incidents from an average of 45 minutes to under 10 minutes, minimizing disruption to their internal development teams and external customers.

These examples underscore that AI is not just a theoretical advancement; it is a strategic imperative for U.S. businesses aiming for superior operational efficiency, enhanced reliability, and sustained market leadership.

Addressing Your Challenges: How Hakuna Matata Technologies Helps U.S. Businesses Succeed

While the benefits of AI in CI/CD and DevOps are compelling, successful implementation is complex. At Hakuna Matata Technologies, a dedicated AI development company and content strategist, we have extensive experience guiding U.S. businesses through the hurdles described below.

Challenge: Data Quality and Volume: AI models require high-quality, comprehensive data. Many U.S. companies struggle with fragmented, inconsistent, or insufficient data from their CI/CD metrics, logs, and codebases, making it difficult to train effective AI models.

- Our Solution: We implement robust data collection strategies and establish clear data governance frameworks. We assist in centralizing and standardizing your CI/CD data, ensuring it is clean, complete, and optimized for AI model training. Our data engineering expertise ensures your AI models learn from the most accurate and relevant information, providing reliable insights.

Challenge: Integration Complexity: Integrating new AI tools and systems into existing, often complex and entrenched CI/CD and DevOps toolchains can be a daunting task for many organizations.

- Our Solution: We specialize in seamless integration. Our approach involves meticulous planning, starting with targeted pilot projects, and gradually expanding the scope. We work with your existing infrastructure and tools, whether it's Jenkins, GitLab CI/CD, or a custom setup. Our Product Engineering Services ensure that new AI systems and integrations fit perfectly within your current technology stack, minimizing disruption and maximizing compatibility.

Challenge: Skill Gap: Adopting and managing AI-powered systems demands specialized skills in data science, machine learning engineering, and MLOps. Many U.S. organizations face a significant talent shortage in these critical areas.

- Our Solution: We bridge this talent gap directly. Our team of experienced AI/ML engineers and DevOps specialists collaborates closely with your internal teams, providing essential knowledge transfer, hands-on guidance, and best practices. We also offer focused training programs and staff augmentation to empower your workforce, ensuring long-term self-sufficiency.

Challenge: Building Trust in AI: Developers and operations teams need to trust the AI's recommendations, predictions, and automated actions. This trust is built through transparency, continuous validation, and demonstrated success over time.

- Our Solution: We advocate for an iterative and transparent approach. We begin with low-risk AI use cases that quickly demonstrate tangible benefits, building confidence. We also implement clear explainability features for AI models where technically feasible, allowing your teams to understand "why" an AI made a particular decision, fostering greater acceptance and trust.

Challenge: Initial Cost of Implementation: While the long-term cost savings and efficiency gains are substantial, the initial investment in AI infrastructure, specialized tools, and expert talent can be a significant upfront consideration for businesses.

- Our Solution: We work with you to develop a clear and measurable Return on Investment (ROI) roadmap. We identify high-impact, lower-cost starting points for AI adoption that demonstrate value quickly, justifying further investment. Our transparent pricing models and phased implementation plans are designed to manage initial expenditures effectively, ensuring a clear path to tangible benefits.

Addressing these challenges requires a strategic, partnership-driven approach. We are committed to ensuring your AI adoption is successful, delivers measurable results, and aligns with your business objectives.

What's Next? Future Trends for U.S. Businesses to Embrace

The journey toward an AI-powered software delivery future is accelerating in the United States. We believe the full potential of AI in software delivery is still emerging, promising even more transformative capabilities.

We are moving toward:

- Autonomous DevOps: The evolution towards self-managing, self-optimizing, and self-healing pipelines. Imagine a future where pipelines autonomously detect a performance dip, identify the precise faulty code, automatically roll back to a stable version, and even suggest a validated fix, all with minimal to no human intervention. This will drastically reduce incident response times from hours to mere minutes, significantly impacting uptime and customer satisfaction.

- Hyper-Personalized & Contextual Deployments: AI tailoring deployments based on individual user behavior, real-time demand, and specific environmental conditions, moving beyond broad market segments. This means features can be rolled out to specific user groups, optimizing adoption and user experience at an unprecedented scale.

- Proactive & Adaptive Security Landscapes: AI continuously learning and adapting to new threats in real-time, shifting from reactive patching to predictive threat prevention. This includes AI-driven threat modeling, intelligent vulnerability remediation, and automated security policy enforcement that adapts to evolving cyber threats faster than human teams possibly could.

- Generative AI for Code and Tests: Expect to see more advanced applications of generative AI beyond conversational interfaces. This includes automatically generating complex code snippets, entire test suites, or even sophisticated deployment scripts from high-level natural language requirements. Integrating generative AI chatbots into development workflows could substantially improve developer productivity, potentially accelerating development cycles by an additional 20-30% over the next five years, according to some industry forecasts.
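The autonomous rollback behavior described in the Autonomous DevOps item above can be sketched with a very simple statistical check. The Python example below flags a deployment for rollback when the post-deploy error rate sits far above the pre-deploy baseline. The function name `should_roll_back`, the thresholds, and the sample data are all illustrative assumptions; a real autonomous pipeline would likely use richer signals and a learned model.

```python
from statistics import mean, stdev
from typing import Sequence


def should_roll_back(baseline_error_rates: Sequence[float],
                     post_deploy_error_rates: Sequence[float],
                     z_threshold: float = 3.0,
                     min_samples: int = 5,
                     min_sigma: float = 0.001) -> bool:
    """Return True when post-deploy errors are anomalously high versus the baseline."""
    if len(baseline_error_rates) < 2 or len(post_deploy_error_rates) < min_samples:
        return False  # assumed guardrail: not enough data to act automatically
    baseline_mean = mean(baseline_error_rates)
    # Floor the spread so a perfectly flat baseline does not trigger on tiny noise
    sigma = max(stdev(baseline_error_rates), min_sigma)
    z_score = (mean(post_deploy_error_rates) - baseline_mean) / sigma
    return z_score > z_threshold


# Hypothetical per-minute error rates before and after a deployment
baseline = [0.010, 0.012, 0.011, 0.009, 0.010, 0.011]
after_bad_deploy = [0.050, 0.060, 0.055, 0.052, 0.058]
after_good_deploy = [0.010, 0.011, 0.012, 0.010, 0.011]

print(should_roll_back(baseline, after_bad_deploy))
print(should_roll_back(baseline, after_good_deploy))
```

Keeping the decision in a small, testable function like this also supports the trust-building point made earlier: teams can inspect exactly why a rollback was triggered before handing more control to automation.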

For U.S. businesses looking to harness this transformative power, the opportunity is now. Start by pinpointing your most critical CI/CD and DevOps pain points. Explore how AI can offer predictive insights, intelligent automation, and adaptive capabilities tailored to your specific needs. Partner with experts like us who have a deep understanding of both AI development and the intricacies of modern software delivery.

Ready to transform your software delivery pipeline with the power of AI? Visit our Product Engineering Services, Web App Development, and Generative AI Chatbots pages to learn how our expertise can help you build intelligent, resilient, and high-performing systems.

Let's make your software delivery truly intelligent and future-ready.