Generative AI Implementation in Legacy Systems

What Is Generative AI Implementation in Legacy Systems?

Generative AI (GenAI) implementation in legacy systems means embedding AI capabilities into older IT platforms, such as mainframes and ERP systems, instead of replacing them outright. Using methods like code refactoring, API integration, and natural language processing for data analysis, organizations can automate tasks, modernize code, improve efficiency and decision-making, and extend system lifespan without a costly "rip and replace" overhaul. The result is a smarter, more resilient, and future-ready path to digital transformation.

How Does GenAI Modernize Legacy Systems?

- Code Understanding & Refactoring: Analyzes complex, old codebases to explain logic, generate documentation, find flaws, and automate updates, significantly reducing the time needed for new developers to understand systems.

- Automation & Efficiency: Automates repetitive tasks, identifies bottlenecks, and streamlines processes, freeing up resources and improving operational speed.

- Data & Insights: Extracts actionable insights from vast legacy data, enabling better analytics, personalized customer experiences, and informed decision-making.

- Cloud Migration: Assists in analyzing system suitability for the cloud and generating code for migration, making the transition smoother and faster.

- Security & Compliance: Identifies security vulnerabilities (like weak encryption) and helps generate fixes or reports to meet current regulatory standards.

Implementation Strategies & Considerations

- Incremental Modernization: Layer GenAI into existing systems using APIs or middleware rather than attempting a full rewrite, preserving core business logic.

- Human-in-the-Loop: Keep human oversight for critical decision-making, using AI for analysis and recommendations.

- Context is Key: Give Large Language Models (LLMs) the context they need to understand a codebase accurately, such as dependency graphs built with code parsers and graph analysis.

- AI Agents: Utilize single, hierarchical, or peer-to-peer AI agents for focused tasks or collaborative problem-solving at scale.
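One way to supply dependency context is to build a call graph first and include only the programs a target actually reaches. This sketch assumes static `CALL 'NAME'` statements (dynamic calls through variables are ignored) and uses hypothetical program names. It illustrates the idea and is not a production COBOL parser.

```python
import re
from collections import deque
from typing import Dict, List, Set

# Static calls only, e.g. CALL 'RATECALC' USING WS-REC
CALL_PATTERN = re.compile(r"\bCALL\s+['\"]([A-Z0-9-]+)['\"]", re.IGNORECASE)


def build_call_graph(programs: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each program name to the set of programs it calls statically."""
    return {
        name: {callee.upper() for callee in CALL_PATTERN.findall(src)}
        for name, src in programs.items()
    }


def dependency_context(graph: Dict[str, Set[str]], root: str) -> List[str]:
    """Breadth-first walk: every program reachable from `root`, root first."""
    seen = [root]
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for callee in sorted(graph.get(node, set())):
            if callee not in seen:
                seen.append(callee)
                queue.append(callee)
    return seen


def build_prompt(programs: Dict[str, str], graph: Dict[str, Set[str]], root: str) -> str:
    """Concatenate the target and its reachable dependencies into one LLM prompt."""
    parts = [f"Target program: {root}. Its dependencies are included for context."]
    for name in dependency_context(graph, root):
        source = programs.get(name, "(source not available)")
        parts.append(f"--- {name} ---\n{source}")
    return "\n\n".join(parts)
```

Programs that the target never reaches stay out of the prompt, which keeps it within the model's context window and reduces noise.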

Key Benefits

- Extended Lifespan: Extends the life of valuable legacy investments.

- Cost Reduction: Lowers modernization costs by reducing manual effort and project timelines.

- Innovation: Accelerates digital transformation and competitiveness.

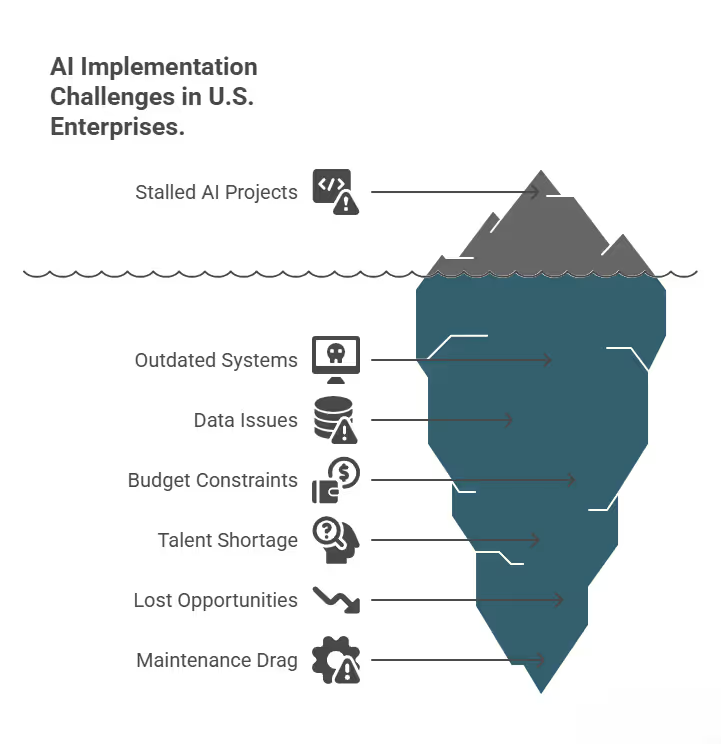

Why U.S. Enterprises Are Adopting Generative AI for Legacy Systems

The adoption of generative AI in legacy environments is growing rapidly in the U.S., driven by a combination of economic, regulatory, and competitive pressures. For CIOs and IT leaders, the business case centers on four major drivers:

- Cost Savings: Replacing legacy systems like mainframes or ERP platforms can cost millions of dollars and take years. Generative AI reduces costs by extending the system’s life and automating manual processes. For example, some banks use AI to automate compliance checks instead of investing in new core banking systems.

- Innovation: U.S. enterprises are under pressure to deliver innovative services faster. Generative AI enables intelligent document handling, predictive analytics, and customer-facing virtual assistants—all layered on top of existing legacy IT without massive disruption.

- Compliance & Risk Management: Highly regulated industries such as healthcare and finance benefit from generative AI’s ability to improve auditing, reporting, and error detection. In healthcare, AI-assisted EHR (Electronic Health Records) tools help meet HIPAA requirements while reducing physician workload.

- Digital Transformation: Digital-first competitors are disrupting traditional industries. Generative AI provides U.S. manufacturers, banks, and hospitals with tools to modernize operations while staying competitive in the era of Industry 4.0 and AI-driven care.

Step-by-Step Generative AI Implementation in Legacy Systems

Step 1: Audit and Landscape Baseline

Before you write a single line of AI code, you must evaluate your "readiness." Moving too fast without an audit leads to "garbage in, garbage out" results.

Assess Technical Debt

Identify systems where hard-to-maintain code blocks your progress. In the United States, many banking and insurance giants still rely on COBOL or monolithic Java environments.

- Action: Catalog these systems.

- Risk: High technical debt increases the cost of AI integration by up to 40%.
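A catalog can start as a simple list of records ranked by a rough debt heuristic. The fields and weights below are hypothetical placeholders chosen for illustration, not an industry-standard scoring model; adjust them to whatever your teams actually track.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LegacySystem:
    name: str
    language: str        # e.g. "COBOL", "Java"
    lines_of_code: int
    has_tests: bool
    documented: bool


def debt_score(system: LegacySystem) -> float:
    """Hypothetical heuristic: size per 100k LOC, plus penalties for no tests or docs."""
    score = system.lines_of_code / 100_000
    if not system.has_tests:
        score += 2
    if not system.documented:
        score += 1
    return score


def rank_by_debt(catalog: List[LegacySystem]) -> List[LegacySystem]:
    """Highest estimated debt first, so audits start where risk is greatest."""
    return sorted(catalog, key=debt_score, reverse=True)
```

Even a crude ranking like this gives the audit a defensible starting order, and the weights can be refined as real integration costs come in.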

Map Your Data Inventory

GenAI thrives on data, but legacy data is often messy. You must map all data sources, including silos and unstructured formats (PDFs, emails, logs).

- Focus: Ensure the AI can "read" these sources without manual intervention.
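A first-pass inventory can be as simple as classifying files by extension and listing the ones nobody has mapped yet. The extension-to-category table below is a hypothetical starting point; real estates will also include databases and mainframe datasets that never appear as files.

```python
from collections import Counter
from pathlib import Path

# Hypothetical mapping from file extension to data category; extend it for your estate.
FORMAT_CLASSES = {
    ".csv": "structured", ".dat": "structured", ".db": "structured",
    ".json": "semi-structured", ".xml": "semi-structured",
    ".pdf": "unstructured", ".eml": "unstructured", ".log": "unstructured", ".txt": "unstructured",
}


def classify(paths):
    """Count files per category and collect paths whose format is not yet mapped."""
    counts = Counter()
    unknown = []
    for path in paths:
        kind = FORMAT_CLASSES.get(Path(path).suffix.lower())
        if kind is None:
            unknown.append(path)  # needs a human decision before the AI can "read" it
        else:
            counts[kind] += 1
    return counts, unknown


def inventory(root):
    """Walk a directory tree (for example, an exported file share) and classify every file."""
    return classify(p for p in Path(root).rglob("*") if p.is_file())
```

The `unknown` list is the useful output: it is exactly the set of sources that would otherwise need manual intervention later.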

Feasibility Check

Determine whether your current runtime, on-premises or in the cloud, can handle the computational load. GenAI workloads typically need high-speed connectivity and significant memory.

- Fact: In 2025, firms in states like Virginia and Texas are increasingly moving these workloads to "edge" cloud locations to reduce latency.
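A quick memory sanity check multiplies a model's parameter count by the bytes each parameter occupies at a given precision. For example, a 7-billion-parameter model at 16-bit precision needs about 7 billion × 2 bytes, or roughly 14 GB, for its weights alone. The sketch below encodes that arithmetic; the 20% overhead for KV cache, activations, and runtime buffers is an assumed margin, and real usage depends on context length and batch size.

```python
# Bytes per parameter at common precisions (int4 packs two weights per byte).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in decimal GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(num_params: float, precision: str, available_gb: float,
                   overhead: float = 1.2) -> bool:
    """True if the weights plus an assumed 20% overhead fit in the available memory."""
    return weight_memory_gb(num_params, precision) * overhead <= available_gb
```

Under these assumptions a 7B model at fp16 does not fit on a 16 GB accelerator, while the same model quantized to int8 does.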

Step 2: Define High-Impact Use Cases

The biggest mistake U.S. enterprises make is trying to "boil the ocean." Successful AI integration starts small and scales based on ROI.

Focus on Specific Problems

Don't build a general "AI Assistant." Instead, solve a specific pain point like:

- Predictive Maintenance: For American manufacturers in the Rust Belt.

- Fraud Detection: For New York-based financial institutions.

- Customer Support: Automating claims processing for healthcare providers in Florida.

Set Clear Success Metrics

Establish Key Performance Indicators (KPIs) immediately.

- Target: Aim for a 20% reduction in operational costs or a 15% gain in employee productivity within the first six months.
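Checking progress against these targets is plain percentage arithmetic. In this sketch "output" stands for whatever productivity measure you choose (for example, claims processed per employee per week), and the numbers in the usage note are hypothetical.

```python
def percent_change(baseline: float, current: float) -> float:
    """Signed change from the baseline, in percent."""
    return (current - baseline) / baseline * 100


def check_kpis(cost_before: float, cost_after: float,
               output_before: float, output_after: float,
               cost_target: float = 20.0, productivity_target: float = 15.0) -> dict:
    """Compare measured results with the 20% cost and 15% productivity targets."""
    cost_reduction = -percent_change(cost_before, cost_after)  # falling cost counts as a positive reduction
    productivity_gain = percent_change(output_before, output_after)
    return {
        "cost_reduction_pct": round(cost_reduction, 1),
        "productivity_gain_pct": round(productivity_gain, 1),
        "cost_target_met": cost_reduction >= cost_target,
        "productivity_target_met": productivity_gain >= productivity_target,
    }
```

For instance, cutting monthly costs from $1,000,000 to $780,000 is a 22% reduction and meets the cost target, while raising output from 200 to 226 units is a 13% gain and falls short of the productivity target.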

Step 3: Lay the Infrastructure Foundation

You do not need to delete your legacy core to use AI. Instead, modernize the "connectivity layer."

Adopt an API-First Approach

Wrap legacy components in modern APIs or microservices. This lets GenAI models "talk" to your old database without breaking it.

- Benefit: This creates a "buffer zone" that protects your core systems from AI-related traffic spikes.
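The "buffer zone" can be as simple as a rate limiter that sits in front of the legacy call. This sketch uses a token-bucket limiter (one common technique; the article does not prescribe a specific one) and a hypothetical `get_account` endpoint. Requests over the limit receive an HTTP-style 429 response instead of reaching the core system.

```python
import time
from typing import Any, Callable, Dict


class TokenBucket:
    """Refills at `rate` tokens per second and allows bursts of up to `capacity` requests."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so tests can control time
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class LegacyFacade:
    """Hypothetical API layer: callers reach the legacy lookup only through this class."""

    def __init__(self, legacy_lookup: Callable[[str], Any], bucket: TokenBucket):
        self.legacy_lookup = legacy_lookup
        self.bucket = bucket

    def get_account(self, account_id: str) -> Dict[str, Any]:
        if not self.bucket.allow():
            # Shed load instead of forwarding an AI-driven traffic spike to the core system.
            return {"status": 429, "error": "rate limit exceeded, retry later"}
        return {"status": 200, "data": self.legacy_lookup(account_id)}
```

In a real deployment the same policy would usually live in an API gateway, but the principle is identical: the legacy core sees a steady, bounded request rate no matter how bursty the AI clients are.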

Use Middleware Bridges

Middleware acts as a translator. It takes data from an old IBM mainframe and converts it into a format that a modern LLM (Large Language Model) can understand.
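A small example of that translation step: mainframe records are often fixed-width and EBCDIC-encoded, and Python's built-in `cp037` codec (EBCDIC US/Canada) can decode them. The field layout below is hypothetical, standing in for what a COBOL copybook would define, and the sketch handles only text and unsigned display digits, not packed decimal (COMP-3) fields.

```python
import json
from typing import List, Tuple

# Hypothetical fixed-width layout: (field name, byte offset, length, type).
LAYOUT: List[Tuple[str, int, int, str]] = [
    ("account_id", 0, 8, "str"),
    ("customer_name", 8, 20, "str"),
    ("balance_cents", 28, 10, "int"),
]


def decode_record(record: bytes, layout=LAYOUT) -> dict:
    """Decode one EBCDIC fixed-width record into a Python dict."""
    text = record.decode("cp037")  # single-byte code page, so character index == byte offset
    fields = {}
    for name, offset, length, kind in layout:
        raw = text[offset:offset + length].strip()
        # Assumes unsigned display digits; signed or COMP-3 fields need extra handling.
        fields[name] = int(raw) if kind == "int" else raw
    return fields


def to_json(record: bytes) -> str:
    """JSON that a downstream API or LLM prompt can consume."""
    return json.dumps(decode_record(record))
```

Keeping the layout as data rather than code means a new record type only needs a new layout table, not a new decoder.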

Upgrade Data Pipelines

Enable real-time data flows. Use tools like Snowflake or Databricks to unify data from on-premises servers and modern cloud storage.
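Before full streaming is in place, a common stepping stone is watermark-based incremental extraction: each run pulls only rows changed since the last run. This sketch uses Python's built-in `sqlite3` as a stand-in for a legacy database and a hypothetical `claims` table; it does not use Snowflake or Databricks APIs, which would normally receive the extracted rows.

```python
import sqlite3
from typing import List, Tuple


def extract_changes(conn: sqlite3.Connection, last_watermark: str) -> Tuple[List[tuple], str]:
    """Return rows changed after `last_watermark` and the watermark to store for the next run."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM claims WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # ISO-8601 timestamps sort correctly as strings, so the last row holds the newest change.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark
```

Run on a short schedule, this keeps the cloud copy close to current without repeatedly copying the full table; change-data-capture tooling takes the same idea further by reading the database log instead of querying a timestamp column.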

How Do You Scale for Growth?

AI needs room to grow. One U.S. e-commerce client planned for 10x data growth up front, which helped it avoid bottlenecks.

This kind of capacity planning is part of AI-ready infrastructure.
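Planning for a growth multiple is compound-growth arithmetic. This sketch converts a target multiple into an implied annual growth rate and estimates how long provisioned capacity will last; the three-year horizon and the terabyte figures in the usage note are illustrative assumptions.

```python
import math


def implied_annual_growth(multiple: float, years: float) -> float:
    """Annual growth rate r such that (1 + r) ** years == multiple."""
    return multiple ** (1 / years) - 1


def years_until_full(current_tb: float, capacity_tb: float, annual_growth: float) -> float:
    """Years before data growing at `annual_growth` per year exhausts `capacity_tb`."""
    return math.log(capacity_tb / current_tb) / math.log(1 + annual_growth)
```

Under these assumptions, 10x growth over three years implies roughly 115% growth per year, and 500 TB of capacity for 50 TB of data lasts about 3.3 years if volume doubles annually.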

Your AI Journey Starts Now

Building an AI-ready enterprise architecture isn’t just a tech project; it’s a game-changer for U.S. CIOs. By modernizing legacy systems, strengthening data architecture, securing AI workloads, and building skilled teams, you’ll turn AI from a buzzword into a revenue driver.

I’ve seen it work for U.S. clients across industries, from retail to healthcare.

Start small, prove value, and scale smart to lead your enterprise AI transformation.

Need help getting started?