Legacy System Modernization: What Actually Works After the Hype

What Is Legacy System Modernization?

Legacy system modernization is the process of updating, replacing, or migrating outdated software systems to modern technologies that improve scalability, security, and integration.

For U.S. enterprises, it ensures business continuity, supports compliance, reduces maintenance costs, and enables digital transformation by leveraging cloud, microservices, and automation.

What Are Legacy Systems?

Legacy systems are older technologies that have become outdated but are still critical to daily business operations. They can be mainframe computers, decades-old software applications, or even custom-built systems that are no longer supported by their original vendors. Often, they run on programming languages that are not widely used today, which makes them difficult to maintain and makes skilled developers hard to find. While these systems might be reliable, their age makes them slow, inflexible, and increasingly incompatible with newer business tools and processes.

Common Challenges with Legacy Systems

- High Costs: Maintaining legacy systems can be incredibly expensive. The specialized hardware and software require costly support contracts, and the scarcity of developers with the right skills often drives up labor costs for bug fixes and minor updates.

- Security Vulnerabilities: Older systems often lack modern security features and are more susceptible to cyberattacks. They don't receive regular security patches, leaving them vulnerable to new threats and non-compliant with current data protection regulations.

- Operational Inefficiency: Legacy systems are not designed for today's fast-paced, interconnected business environment. They often have slow processing times, clunky user interfaces, and cannot easily integrate with new cloud-based services, leading to manual workarounds and reduced productivity.

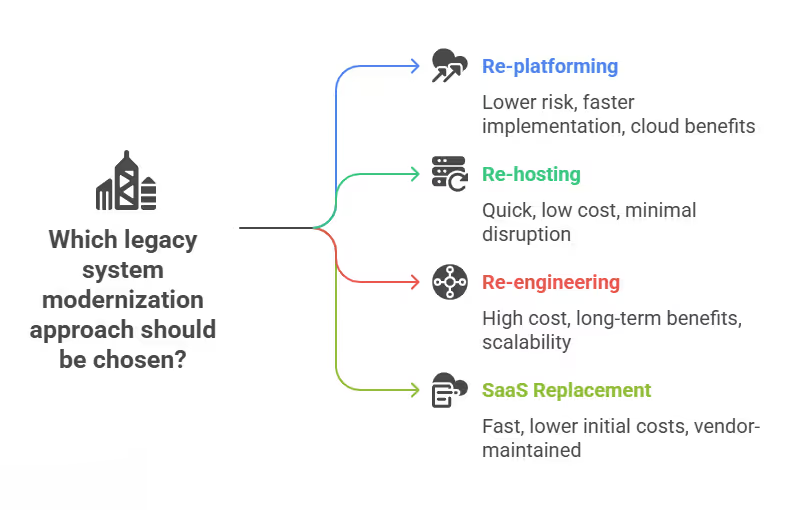

Legacy System Modernization Approaches

Modernization helps businesses remain competitive in a rapidly evolving digital landscape, but there is no single way to do it. There are several approaches, each with its own advantages and disadvantages. The best approach depends on the specific needs of the business, including budget, timeline, and risk tolerance.

Here are some of the top approaches to legacy system modernization:

Re-platforming: This approach involves moving an application to a new platform without changing its core code, such as migrating a mainframe application to a cloud-based server. This is less risky than a complete rewrite and allows businesses to take advantage of modern infrastructure. It can improve performance and reduce operational costs. However, it may not address all underlying architectural issues, and the application may not fully leverage the benefits of the new platform.

- Pros: Lower risk compared to a full rewrite, faster time to market, and allows for the adoption of cloud benefits.

- Cons: May not resolve all technical debt and could still require significant effort to optimize for the new environment.

- U.S.-centric case example: A large U.S. insurance company moved its policy management system from an on-premise data center to a cloud platform, improving system performance and reducing hardware maintenance costs.

Re-hosting (Lift & Shift): This is the simplest and fastest modernization method, where an application is moved from its current environment to a new one, typically the cloud, with minimal changes. Think of it as simply "lifting" the application and "shifting" it to a new location. This is often the first step in a broader cloud migration strategy.

- Pros: Low cost, quick to implement, and minimizes disruption.

- Cons: The application does not fully leverage cloud-native features, and it may not improve performance or scalability significantly.

- U.S.-centric case example: A U.S. retail chain migrated its entire e-commerce platform to a new hosting provider to reduce IT overhead and improve reliability without altering the application's code.

Re-engineering: This approach involves significantly changing the application's architecture and code to rebuild it for a modern platform. It is more complex and time-consuming than re-hosting or re-platforming but offers the most long-term benefits. Because the entire system is redesigned, it is effectively a form of Product Engineering Services.

- Pros: Greatly improves scalability, security, and performance; allows for the integration of new technologies like Generative AI Chatbots; and resolves technical debt.

- Cons: High cost and risk, longer implementation time, and requires a highly skilled team.

- U.S.-centric case example: A U.S. financial services firm re-engineered its legacy banking application into a modern microservices architecture, enabling faster feature releases and better mobile integration.

Replacement with SaaS Solutions: This involves replacing the legacy system entirely with a new, off-the-shelf Software-as-a-Service (SaaS) solution. Instead of rebuilding, the company simply buys a new system that is already maintained by a third-party vendor. This is a good option when the legacy system's functionality is a commodity and not a core differentiator.

- Pros: Faster implementation, lower initial costs, and access to new features without in-house development.

- Cons: Less control over the solution, potential vendor lock-in, and limited customization options.

- U.S.-centric case example: A small U.S. manufacturing company replaced its old, custom-built ERP system with a new cloud-based ERP SaaS solution, which simplified operations and reduced maintenance costs.

Legacy Modernization Trends & Strategies

Legacy modernization trends focus on cloud migration, AI-driven automation, microservices, and low-code/no-code platforms. Key strategies involve rehosting, refactoring, rearchitecting, and replacing systems. Together, they aim to boost agility, reduce costs, enhance security, and integrate with modern tech, transforming outdated systems into scalable, efficient, and future-ready digital assets.

Key Trends

- Cloud-Native Architectures: Moving beyond simple cloud migration to build modular, containerized apps that scale and integrate easily.

- AI & Automation: Using AI for code analysis, bug detection, and automation to speed up modernization and reduce manual errors.

- Microservices: Decomposing large monolithic applications into smaller, manageable, independent services.

- Low-Code/No-Code: Enabling non-technical users to build and modernize apps faster and at lower cost.

- API-First Approach: Encapsulating legacy functions into APIs to unlock data and integrate with new services.

- Data Analytics & AI Integration: Embedding advanced analytics and AI directly into modernized systems for smarter operations.

Core Strategies (Six Common Options)

- Rehosting (Lift & Shift): Moving apps to the cloud with minimal changes for quick cost savings.

- Replatforming: Making minor adjustments (e.g., moving to a new database) to leverage cloud benefits.

- Refactoring (Restructuring): Optimizing code for better performance and maintainability without changing external behavior, reducing technical debt.

- Rearchitecting/Rebuilding: Redesigning and rewriting the application from scratch using modern tech for maximum long-term benefits.

- Replacing (Retire/Buy): Sunsetting the old system and replacing it with a purchased COTS (Commercial Off-the-Shelf) or SaaS product, or with a newly built system.

- Encapsulation: Wrapping legacy components with APIs to expose functionality to new applications.
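To make the encapsulation idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `legacy_lookup` stands in for an existing legacy routine that returns a fixed-width record, and the column layout is invented for illustration. The point is only that new applications call the wrapper and never see the legacy format.

```python
from dataclasses import dataclass


def legacy_lookup(account_id: str) -> str:
    """Hypothetical stand-in for a legacy routine returning a fixed-width record."""
    # Assumed layout: id (6 chars), name (10 chars), balance in cents (8 digits)
    return f"{account_id:<6}{'J SMITH':<10}{1234567:08d}"


@dataclass
class Account:
    account_id: str
    name: str
    balance_cents: int


def get_account(account_id: str) -> Account:
    """Modern-facing wrapper that hides the fixed-width format from callers."""
    record = legacy_lookup(account_id)
    return Account(
        account_id=record[0:6].strip(),
        name=record[6:16].strip(),
        balance_cents=int(record[16:24]),
    )


if __name__ == "__main__":
    print(get_account("A1001"))
```

In practice a wrapper like this would typically sit behind an HTTP or messaging API, but the parsing and translation step is the core of the technique.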

Legacy Software Modernization and Migration Consulting for Enterprises

Modernizing legacy software is a critical priority for enterprises in 2025 as outdated systems increasingly hinder agility, increase maintenance costs, and create security vulnerabilities. Consulting services focus on transforming these aging digital assets into scalable, secure, and future-ready systems.

Key Service Offerings

- Assessment & Auditing: Comprehensive analysis of codebases, infrastructure, and business logic to identify performance gaps and security risks.

- Data Modernization: Migrating siloed or unorganized data to modern cloud data platforms like Snowflake or BigQuery that can support low-latency AI/BI workloads.

- UI/UX Modernization: Redesigning outdated interfaces to meet 2025 standards for user experience and mobile responsiveness.

- AI Integration: Utilizing Generative AI to automate code refactoring (e.g., converting COBOL to Java/Python) and accelerate data migration.

- DevOps & CI/CD Enablement: Implementing automated pipelines to speed up delivery cycles and improve software quality.

Top Consulting Firms & Partners

Large-scale enterprises often partner with global integrators or specialized boutiques, including:

- Global Leaders: Deloitte, Accenture, TCS, HCLTech, Kyndryl.

- Specialized Agencies: Altoros, Algoscale, Chetu, instinctools.

Measurable Business Benefits

- Cost Reduction: Modernization can reduce application maintenance and running costs by up to 50%.

- Agility: Transitioning to microservices enables faster feature releases and better adaptability to market changes.

- Security: Embedding modern encryption, zero-trust protocols, and compliance standards (GDPR, HIPAA) protects critical data.

What are the Top Considerations when Modernizing Legacy Data Systems?

Top considerations for modernizing legacy data systems include strategic goal alignment, a deep assessment (risks, integrations, tech debt, security), and phased approaches (Retain, Rehost, Replatform, Refactor, Rearchitect, Replace). Teams also need to account for data migration complexity, the people/skills gap, integration with modern tech, and cost/ROI, all while managing downtime and ensuring compliance. The goal is a business that is better prepared for the future in efficiency, security, and scalability.

Strategic & Planning Considerations

- Define Clear Goals: Align modernization with business strategy (e.g., better performance, lower costs, enhanced security).

- Comprehensive Assessment: Map existing systems, identify technical debt, business criticality, and potential integration points (APIs).

- Cost & ROI: Evaluate Total Cost of Ownership (TCO) and potential savings from modernization.

- Phased Approach: Avoid "big bang" by breaking projects into manageable phases, often starting with essential features or API layers.
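The cost and ROI comparison above can be sketched as simple arithmetic. The figures below are invented purely for illustration, and the helper names `tco` and `payback_years` are hypothetical. A real evaluation would also include items such as training, parallel running, and decommissioning costs.

```python
from typing import Optional


def tco(upfront_cost: int, annual_cost: int, years: int) -> int:
    """Total cost of ownership over a fixed horizon (no discounting)."""
    return upfront_cost + annual_cost * years


def payback_years(migration_cost: int, legacy_annual: int, modern_annual: int) -> Optional[float]:
    """Years until cumulative savings cover the migration cost, or None if there are no savings."""
    annual_savings = legacy_annual - modern_annual
    if annual_savings <= 0:
        return None
    return migration_cost / annual_savings


if __name__ == "__main__":
    # Illustrative numbers only (assumptions, not benchmarks)
    legacy_annual = 1_000_000
    modern_annual = 600_000
    migration_cost = 1_200_000

    print("Legacy 5-year TCO:", tco(0, legacy_annual, 5))
    print("Modern 5-year TCO:", tco(migration_cost, modern_annual, 5))
    print("Payback (years):", payback_years(migration_cost, legacy_annual, modern_annual))
```

With these assumed inputs the modernized system costs less over five years, but only because the horizon is longer than the payback period, which is why the time horizon matters as much as the headline savings.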

Technical & Data Considerations

- Data Migration: Plan for complex data extraction, transformation, and loading (ETL) to avoid loss or corruption.

- Integration Complexity: Address hidden dependencies and ensure new systems can communicate with existing tools.

- Security & Compliance: Modernize outdated security, authentication, and encryption to meet current standards and regulations.

- Architecture: Choose modern architectures (like microservices) for better scalability and maintainability.
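One way to guard against loss or corruption during data migration is to reconcile the source and target after loading. The sketch below is a minimal, assumption-laden example: rows are plain dictionaries, every row has an `id` key, and the function names are hypothetical. It fingerprints each row and reports anything missing, unexpected, or changed.

```python
import hashlib


def row_fingerprint(row: dict) -> str:
    """Hash a row's fields in a stable key order so equal rows give equal hashes."""
    canonical = "|".join(f"{key}={row[key]}" for key in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows: list, target_rows: list, key: str = "id") -> dict:
    """Compare two row sets by primary key and fingerprint."""
    source = {row[key]: row_fingerprint(row) for row in source_rows}
    target = {row[key]: row_fingerprint(row) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing": sorted(source.keys() - target.keys()),     # in source, not in target
        "unexpected": sorted(target.keys() - source.keys()),  # in target, not in source
        "changed": sorted(k for k in shared if source[k] != target[k]),
    }


if __name__ == "__main__":
    old = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}, {"id": 3, "name": "Cy"}]
    new = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bobby"}, {"id": 4, "name": "Dee"}]
    print(reconcile(old, new))
```

For large tables the same idea is usually applied in the database itself, with per-table row counts and aggregate checksums, rather than by pulling every row into memory.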

People & Process Considerations

- Change Management: Plan for employee training, upskilling, and managing resistance to new workflows.

- Knowledge Gaps: Address the scarcity of developers familiar with outdated languages/platforms.

- Operational Risk: Minimize downtime and service interruptions during migration.

Agile Transformation Consulting for Legacy Software Modernization

Agile transformation consulting for legacy software combines iterative development (Agile/DevOps) with modernization strategies (cloud, microservices, AI) to boost speed, reduce costs, improve security, and enhance adaptability. Consultants help companies break large projects into manageable sprints, which supports continuous value delivery, minimal disruption, and protection against accumulating tech debt.

Core Services Offered by Consultants

- System Audit & Strategy: Assess current tech debt, identify bottlenecks, and create a phased roadmap for modernization aligned with business goals.

- Iterative Development: Apply Agile/Scrum to break large projects into sprints, ensuring continuous delivery and reducing risk.

- DevOps & CI/CD: Implement automated pipelines for continuous integration and deployment, ensuring smooth, disruption-free updates.

- Cloud Migration: Move applications to cloud-native platforms (Kubernetes, serverless) for scalability and flexibility.

- Microservices & Re-architecting: Decompose monoliths into smaller, independent services for agile development and scaling.

- AI Integration: Embed AI for automation, predictive analytics, and enhanced decision-making within legacy systems.

- UI/UX Modernization: Improve user adoption and efficiency through modern interfaces.

Key Benefits

- Increased Agility: Adapt faster to market changes.

- Reduced Costs: Lower maintenance overhead and technical debt.

- Improved Performance & Security: Optimize applications and implement modern security practices.

- Enhanced Productivity: Access to new technologies and streamlined workflows.

How They Do It (The Agile Way)

- Phased Approach: Tackle modernization module by module to minimize business disruption.

- Value-Driven: Focus on delivering tangible business value in short cycles (sprints).

- Continuous Improvement: Foster a culture of ongoing enhancement and feedback.
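The module-by-module approach is often implemented with a routing layer that sends each request to either the old or the new implementation (commonly called a strangler-fig pattern, a name the list above does not use). The sketch below is illustrative only: the module names, the handlers, and the `MIGRATED_MODULES` set are all hypothetical.

```python
# Modules already moved to the new platform (hypothetical example state)
MIGRATED_MODULES = {"billing"}


def legacy_handler(module: str, payload: dict) -> str:
    """Stand-in for a call into the legacy system."""
    return f"legacy:{module}"


def modern_handler(module: str, payload: dict) -> str:
    """Stand-in for a call into the modernized service."""
    return f"modern:{module}"


def route(module: str, payload: dict, migrated=MIGRATED_MODULES) -> str:
    """Send the request to the new service only if its module has been migrated."""
    handler = modern_handler if module in migrated else legacy_handler
    return handler(module, payload)


if __name__ == "__main__":
    print(route("billing", {}))
    print(route("reports", {}))
```

Each sprint can then migrate one more module and add it to the set, while everything else continues to run on the legacy system unchanged.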

Benefits of Legacy System Modernization

Modernizing legacy systems is a crucial step for businesses looking to stay competitive in today's fast-paced digital landscape. It involves updating outdated software, applications, and infrastructure to leverage modern technology, which provides a significant return on investment. The process goes beyond a simple upgrade; it's a strategic move to address fundamental issues like inefficiency, high maintenance costs, and a lack of flexibility.

By modernizing, companies can transform their IT environment into a more dynamic and resilient asset that supports long-term growth and innovation.

Key Benefits of Legacy System Modernization

- Scalability and Agility:

  - Legacy systems often struggle to handle growing data volumes or user demands. Modernized systems, built on cloud-native architectures or microservices, can scale resources up or down automatically.

  - This agility allows businesses to respond quickly to market changes, new opportunities, or sudden increases in user traffic without the need for expensive hardware investments.

- Enhanced Security and Compliance:

  - Older systems are a prime target for cyberattacks because they lack modern security features and patches. They often have unaddressed vulnerabilities that can lead to data breaches.

  - Modernization integrates robust security protocols, such as end-to-end encryption, multi-factor authentication, and regular security updates, to protect sensitive data and ensure compliance with industry regulations like GDPR or HIPAA.

- Cost Optimization:

  - The high cost of maintaining legacy systems, including specialized hardware, obsolete software licenses, and skilled support staff, can be a major drain on resources.

  - By migrating to modern, often cloud-based, platforms, companies can reduce operational costs, lower energy consumption, and shift from capital expenditures to a more predictable and scalable operational expenditure model.

- Future-proofing IT Investments:

  - Modernizing your technology stack ensures that your IT infrastructure can adapt to future innovations.

  - This protects your initial investment by making it easier to integrate emerging technologies like Generative AI Chatbots or to leverage Product Engineering Services for building new features or applications. This also improves the overall Web App Development process.

Challenges in Legacy System Modernization

Embarking on a legacy system modernization project can be a complex undertaking, fraught with significant challenges. While the potential rewards, like improved efficiency and enhanced capabilities, are substantial, businesses must navigate several key hurdles to ensure a successful transition.

These challenges often revolve around technical complexities, human factors, and the intricate web of existing IT infrastructure.

- Data Migration Risks: Moving data from an old system to a new one is a critical and often risky part of modernization. A major challenge is ensuring data integrity, which means all data must be transferred completely and without corruption. There's also the risk of data loss or inconsistency during the migration process, which can lead to operational issues and inaccurate reporting in the new system.

- Employee Adoption: A new system, no matter how advanced, is only as good as its user base. Resistance to change is a common hurdle, as employees may be accustomed to the old system's workflows and hesitant to learn new processes. A lack of proper training or insufficient communication about the benefits of the new system can lead to low adoption rates and a decline in productivity as teams struggle to adapt.

- Integration with Existing Tools: Legacy systems rarely operate in a vacuum. They are often interconnected with other applications and tools that are essential for business operations. Modernizing one system requires careful planning to ensure seamless integration with all other existing software. This can be challenging due to outdated APIs, incompatible data formats, or a lack of documentation for the legacy system, which can create communication breakdowns and operational silos.

Best Practices for Legacy System Modernization

For U.S. enterprises, modernizing a legacy system effectively requires a well-defined strategy for navigating the complex transition from outdated technology to modern, efficient platforms.

These strategies focus on minimizing risk, maximizing value, and ensuring a smooth migration.

- Cloud-First Strategy: Adopting a cloud-first strategy is a fundamental best practice for modernization. This approach prioritizes migrating applications and infrastructure to cloud platforms like AWS, Azure, or Google Cloud. The cloud offers scalability, cost-effectiveness, and enhanced security that legacy systems often lack. By leveraging cloud-native services, organizations can improve performance, reduce maintenance overhead, and accelerate innovation.

- Agile and DevOps Adoption: Implementing Agile and DevOps methodologies is crucial for modernizing at scale. Agile principles, such as iterative development and continuous feedback, help teams adapt to changes and deliver value incrementally. DevOps practices, including continuous integration (CI) and continuous delivery (CD), automate the software development lifecycle. This automation speeds up deployment, reduces errors, and fosters better collaboration between development and operations teams.

- Partnering with Modernization Experts: Collaborating with specialized modernization experts can significantly streamline the process. These partners bring deep expertise in legacy systems, modern technologies, and migration strategies. They can provide valuable insights, help create a tailored roadmap, and mitigate potential risks. This partnership ensures the project stays on track, avoids common pitfalls, and delivers the desired business outcomes efficiently.

- Continuous Monitoring and Optimization: After modernization, the work doesn't stop. Continuous monitoring and optimization are vital for long-term success. Monitoring tools provide real-time visibility into the performance, security, and health of the new system. By continuously analyzing data, organizations can identify bottlenecks, optimize resource usage, and make data-driven decisions. This ongoing process ensures the modernized system remains high-performing, secure, and aligned with business needs.

Case Studies: Successful Legacy Modernization in the U.S.

Here are some real-world case studies of successful legacy modernization in U.S. enterprises.

Banking/Finance

Banks and financial institutions often rely on decades-old mainframe systems. Modernizing these systems helps them offer new digital services, improve security, and respond to market changes more quickly.

- Capital One: This diversified financial services company began a significant legacy modernization journey in 2011, adopting a cloud-first strategy. It migrated its legacy applications and data to the cloud and re-architected them into microservices. This helped the company use artificial intelligence and machine learning to enhance customer experience, detect fraud, and manage risk. The result was a reduction in IT costs, improved operational efficiency, and a faster pace of innovation.

- HCLTech and a Federal Credit Union: HCLTech partnered with one of the largest federal credit unions in the world to modernize its legacy applications. The goal was to move to a more efficient system that would improve the customer experience and deliver a better return on investment. The solution involved migrating the client's sales, customer service, and employee functions to the Salesforce Financial Service Cloud. The project established a modern DevOps pipeline for continuous integration and delivery, which allowed for real-time deployment changes and better software quality.

Healthcare

In healthcare, legacy systems can be a serious barrier to providing modern patient care. Modernization efforts focus on improving data interoperability, enhancing security, and enabling new services like telemedicine.

- Medsphere Systems Corporation: This healthcare IT solutions company partnered with a firm to modernize its legacy inventory and supply chain management system. The outdated system was a roadblock to efficiency and made it harder to meet modern healthcare requirements such as HIPAA. The modernization project created a new, advanced solution that streamlined operations, improved security, and made it easier for Medsphere to expand its services to larger hospital systems. The company saw a 20% reduction in training time for the new system, which helped boost customer satisfaction and adoption rates.

- Optum (an HCLTech Case Study): One of the world's largest managed healthcare companies had a monolithic legacy application for child support services that was more than 20 years old. The application had an outdated user interface and slow financial processes. HCLTech was hired to transform and modernize this application, using its proprietary tools to convert the legacy source code to a new platform. This transformation led to significant improvements, including a 60% savings in application development effort and better overall maintainability of the system.

Future Trends in Legacy System Modernization

Legacy system modernization is becoming more critical as businesses look to stay competitive. The future of this field is being shaped by several key trends, moving away from simple upgrades to more strategic, technology-driven transformations.

- AI-Driven Modernization: Artificial intelligence is changing how companies approach legacy systems. AI and machine learning tools can automate the analysis of old code, identify dependencies, and even help in rewriting or refactoring it. This speeds up the process, reduces human error, and allows teams to focus on more complex tasks. For example, AI can predict which parts of a system will be most difficult to modernize, helping project managers plan more effectively. This approach makes modernization projects faster and more cost-effective.

- Low-Code/No-Code Adoption: Platforms that use low-code and no-code (LCNC) development are making modernization more accessible. These tools allow businesses to build new applications or wrap existing legacy functions with minimal or no manual coding. Instead of a complete rewrite, companies can use LCNC platforms to create modern interfaces and workflows on top of their older systems. This approach accelerates time-to-market and allows business users who aren't developers to participate in the modernization process. It bridges the gap between old and new technology more quickly.

- API-First Ecosystems: A major shift in modernization is the move toward API-first architectures. Instead of a monolithic structure, legacy systems are being broken down into smaller, reusable services that communicate through APIs (Application Programming Interfaces). This strategy, which is a core part of Product Engineering Services, allows companies to unlock the value of their old data and business logic. By exposing legacy functions through APIs, businesses can integrate their systems with new cloud-native applications, partner services, and Web App Development projects. This creates a flexible, scalable, and interconnected ecosystem that is much easier to manage and update.

What's Next

Legacy system modernization is no longer optional for U.S. enterprises; it's a business necessity. By transforming outdated systems into cloud-ready, secure, and scalable platforms, organizations can stay competitive, meet compliance standards, and deliver digital-first experiences.

For IT leaders, the time to act is now. Modernization is the foundation of future growth and innovation.